Want to predict how much revenue your customers will bring in the future? Python's Lifetimes library makes it simple to calculate Customer Lifetime Value (CLV) using transaction data. Here's a quick overview of what you'll learn:

- What is CLV? It's the total revenue a customer generates over their relationship with your business.

- Why does it matter? It helps allocate marketing budgets, improve retention, and identify high-value customers.

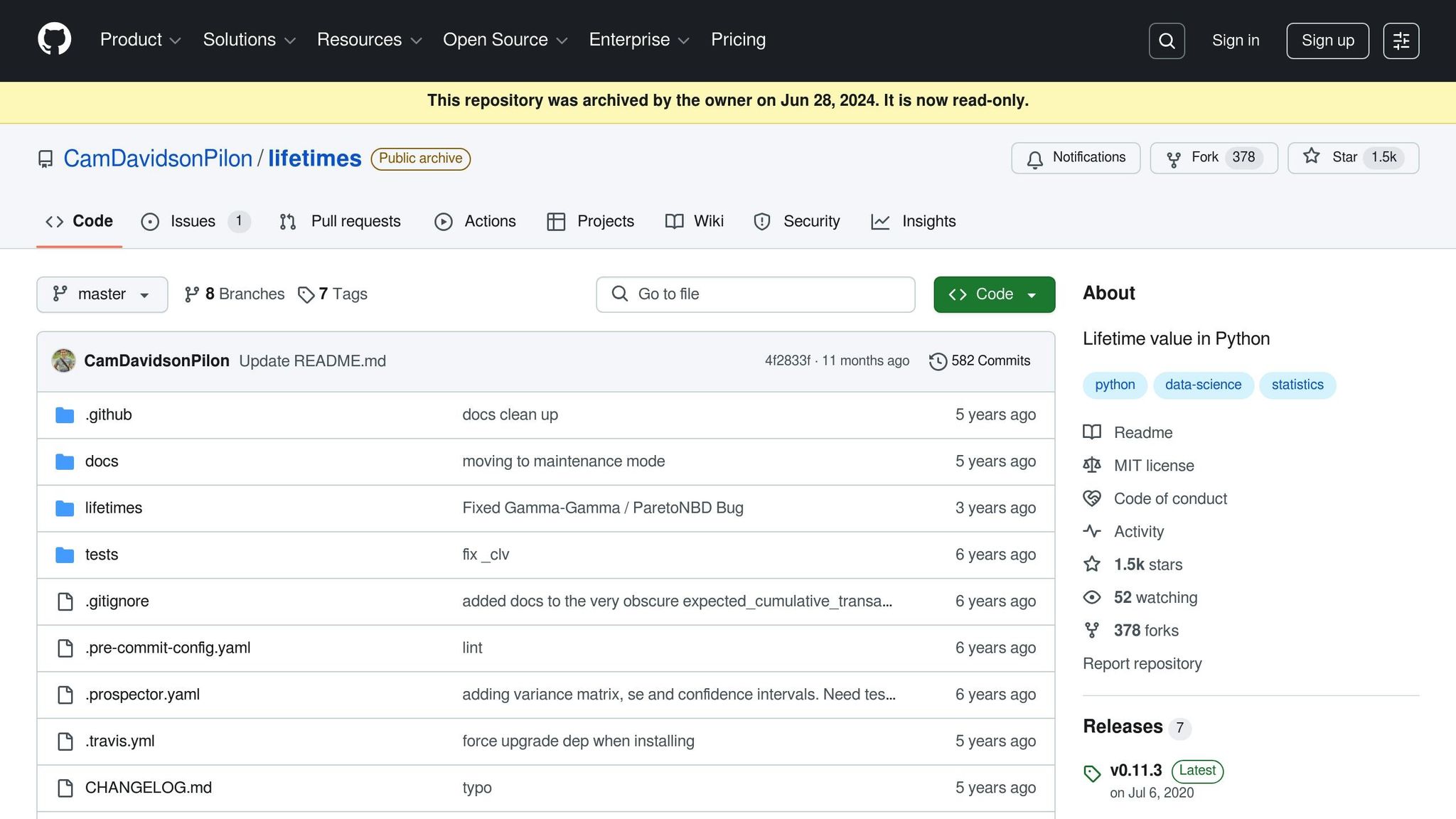

- How does Lifetimes help? It implements probabilistic models such as BG/NBD (for purchase frequency and customer dropout) and Gamma-Gamma (for average transaction value).

Key Steps:

- Install Lifetimes: `pip install lifetimes pandas numpy matplotlib seaborn scikit-learn`

- Prepare Data: Organize transaction data with fields like customer ID, transaction date, and monetary value.

- Model CLV:

  - Use BG/NBD to predict purchase frequency.

  - Use Gamma-Gamma to estimate average transaction value.

- Analyze Results: Export predictions, categorize customers by value tiers, and use the insights to guide business decisions.

Example Use Cases:

- E-commerce platforms

- Retail businesses

- Subscription services

CLV predictions can transform how you approach customer retention and marketing strategies. Ready to dive in? Here's everything you need to know.


Setup and Data Preparation

Getting your environment and data ready is crucial for accurate Customer Lifetime Value (CLV) predictions. Here's a step-by-step guide to ensure your setup is smooth and your data is formatted correctly.

Installation Steps

Start by installing the necessary libraries. Run these commands in your terminal:

```shell
pip install lifetimes
pip install pandas numpy matplotlib seaborn scikit-learn
```

If you'd like to enhance your predictions further, consider adding PyCaret:

```shell
pip install pycaret
```

Required Data Format

The Lifetimes library requires your transaction data to follow a specific structure. Make sure your dataset includes these fields:

| Field | Description | Example |
|---|---|---|
| Customer ID | Unique identifier | 1001 |
| Transaction Date | Timestamp | 01/15/2023 |
| Monetary Value | Transaction amount | $22.35 |

Your transaction log should look something like this:

| customer_id | transaction_date | monetary_value |
|---|---|---|
| 1001 | 01/15/2023 | 22.35 |
| 1002 | 01/16/2023 | 11.77 |
| 1001 | 02/20/2023 | 15.50 |
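To see why an explicit date format helps, here is a minimal pandas-only sketch that loads the three example rows above and parses the MM/DD/YYYY strings. The variable names `raw` and `transactions` are illustrative and not part of Lifetimes.

```python
import pandas as pd

# The example log from the table above; dates are MM/DD/YYYY strings
raw = pd.DataFrame({
    "customer_id": [1001, 1002, 1001],
    "transaction_date": ["01/15/2023", "01/16/2023", "02/20/2023"],
    "monetary_value": [22.35, 11.77, 15.50],
})

# An explicit format avoids silently swapping day and month on ambiguous dates
transactions = raw.assign(
    transaction_date=pd.to_datetime(raw["transaction_date"], format="%m/%d/%Y")
)

# Fail early if any required column is missing
required = {"customer_id", "transaction_date", "monetary_value"}
missing = required - set(transactions.columns)
assert not missing, f"missing columns: {missing}"
print(transactions.dtypes)
```

Monetary values are stored as plain numbers (22.35), not strings like "$22.35", so they can be summed and averaged directly.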

Data Cleanup Steps

To ensure your data is ready for analysis, follow these preparation steps:

- Convert Dates: Ensure all transaction dates are in a consistent datetime format:

```python
import pandas as pd

df['transaction_date'] = pd.to_datetime(df['transaction_date'])
```

- Handle Monetary Values: Cap extreme outliers so they don't skew your results:

```python
upper_limit = df['monetary_value'].quantile(0.99)
df['monetary_value'] = df['monetary_value'].clip(upper=upper_limit)
```

- Aggregate Transactions: If your data includes item-level details, group them into transaction summaries:

```python
df = df.groupby(['customer_id', 'transaction_date']).agg({'monetary_value': 'sum'}).reset_index()
```

- Create RFM Summary: Convert your cleaned transaction data into the Recency, Frequency, and Monetary (RFM) format that Lifetimes requires. This step organizes your data into a format suitable for CLV modeling:

```python
from lifetimes import utils

summary = utils.summary_data_from_transaction_data(
    df,  # the transaction DataFrame (the parameter is named `transactions`)
    customer_id_col='customer_id',
    datetime_col='transaction_date',
    monetary_value_col='monetary_value',
    observation_period_end='2023-12-31',
)
```
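The first three cleanup steps can be bundled into one function so they run the same way every time. This is a sketch under a few assumptions: the hypothetical helper `clean_transactions` normalizes timestamps to calendar days (matching Lifetimes' default daily period), and the small item-level sample is made up for illustration.

```python
import pandas as pd


def clean_transactions(df: pd.DataFrame, cap_quantile: float = 0.99) -> pd.DataFrame:
    """Parse dates, cap outliers, and aggregate to one row per customer per day."""
    out = df.copy()
    out["transaction_date"] = pd.to_datetime(out["transaction_date"])
    # Cap (winsorize) rather than drop: the row stays, only its amount is limited
    upper_limit = out["monetary_value"].quantile(cap_quantile)
    out["monetary_value"] = out["monetary_value"].clip(upper=upper_limit)
    # Drop the time of day so several orders on the same day collapse together
    out["transaction_date"] = out["transaction_date"].dt.normalize()
    return out.groupby(["customer_id", "transaction_date"], as_index=False)[
        "monetary_value"
    ].sum()


# Hypothetical item-level rows: customer 1001 bought twice on 2023-01-15
items = pd.DataFrame({
    "customer_id": [1001, 1001, 1002, 1001],
    "transaction_date": [
        "2023-01-15 09:00", "2023-01-15 17:30", "2023-01-16 12:00", "2023-02-20 08:15",
    ],
    "monetary_value": [10.00, 12.35, 11.77, 15.50],
})
cleaned = clean_transactions(items, cap_quantile=1.0)  # 1.0 means no capping
print(cleaned)
```

Capping happens before aggregation here, so it limits individual line items; if you would rather cap whole transactions, swap the order of the two steps.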

Finally, if you're analyzing monetary value with the Gamma-Gamma model, keep only customers with repeat purchases (`frequency > 0`):

```python
returning_customers = summary[summary['frequency'] > 0]
```
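The Gamma-Gamma model also assumes that a customer's average spend is independent of how often they buy, and the Lifetimes documentation suggests checking the correlation between `frequency` and `monetary_value` on returning customers before fitting. A minimal pandas sketch on made-up summary values; the 0.3 cutoff is an arbitrary illustration, not a rule from the library:

```python
import pandas as pd

# Hypothetical RFM summary with the column names Lifetimes produces (values are made up)
summary = pd.DataFrame(
    {
        "frequency": [0, 2, 1, 5, 3, 0, 4],
        "monetary_value": [0.0, 25.0, 40.0, 30.0, 22.0, 0.0, 35.0],
    },
    index=pd.Index([1001, 1002, 1003, 1004, 1005, 1006, 1007], name="customer_id"),
)

returning_customers = summary[summary["frequency"] > 0]
# Pearson correlation between purchase count and average spend
corr = returning_customers["frequency"].corr(returning_customers["monetary_value"])
print(f"frequency vs. monetary_value correlation: {corr:.3f}")
if abs(corr) > 0.3:  # illustrative threshold only
    print("Sizable correlation: the Gamma-Gamma independence assumption may not hold.")
```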

At this point, your dataset is fully prepped and ready for building CLV models.

Creating CLV Models

To predict Customer Lifetime Value (CLV), you can combine RFM analysis with probabilistic models using the Lifetimes library. Here's how to build and refine your CLV prediction models step by step.

Building the RFM Matrix

First, create an RFM matrix to summarize customer behavior:

```python
from lifetimes import utils

# Create the RFM matrix from the cleaned transaction log (df) prepared above
rfm_matrix = utils.summary_data_from_transaction_data(
    df,
    customer_id_col='customer_id',
    datetime_col='transaction_date',
    monetary_value_col='monetary_value',
    observation_period_end='2025-05-13',
)

# Returning customers (at least one repeat purchase); the Gamma-Gamma model needs these
active_customers = rfm_matrix[rfm_matrix['frequency'] > 0].copy()
```

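This matrix has one row per customer and four columns: frequency (the number of repeat purchases, i.e., purchase periods after the first), recency (the customer's age at their most recent purchase, i.e., the time between their first and last purchase), T (the customer's age: the time from their first purchase to the end of the observation period), and monetary_value (the average value of their repeat purchases). With the default daily period, recency and T are measured in days. With this foundation, you can move on to predicting future purchases using the BG/NBD model.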
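To make these definitions concrete, the sketch below computes the same four columns by hand with pandas for the three-row example log. It illustrates the definitions under the default daily period and is not Lifetimes' own implementation; `rfm_summary` is a hypothetical helper, and for a simple case like this one its output should agree with `summary_data_from_transaction_data`.

```python
import pandas as pd


def rfm_summary(transactions: pd.DataFrame, observation_end: str) -> pd.DataFrame:
    """Hand-rolled RFM summary following the Lifetimes definitions (daily periods)."""
    tx = transactions.copy()
    tx["day"] = pd.to_datetime(tx["transaction_date"]).dt.normalize()
    # One row per customer per purchase day, with spend summed within the day
    daily = tx.groupby(["customer_id", "day"], as_index=False)["monetary_value"].sum()
    end = pd.Timestamp(observation_end)
    daily = daily[daily["day"] <= end].sort_values(["customer_id", "day"])

    first = daily.groupby("customer_id")["day"].min()
    last = daily.groupby("customer_id")["day"].max()
    n_days = daily.groupby("customer_id")["day"].count()

    # Average spend over repeat purchase days only (the first purchase day is excluded)
    repeat = daily[daily.groupby("customer_id").cumcount() > 0]
    repeat_mean = repeat.groupby("customer_id")["monetary_value"].mean()

    out = pd.DataFrame({
        "frequency": n_days - 1,            # repeat purchase days
        "recency": (last - first).dt.days,  # age at last purchase
        "T": (end - first).dt.days,         # age at end of observation
    })
    out["monetary_value"] = repeat_mean.reindex(out.index).fillna(0.0)
    return out


log = pd.DataFrame({
    "customer_id": [1001, 1002, 1001],
    "transaction_date": ["2023-01-15", "2023-01-16", "2023-02-20"],
    "monetary_value": [22.35, 11.77, 15.50],
})
print(rfm_summary(log, "2023-12-31"))
```

Customer 1001 ends up with frequency 1, recency 36, and T 350, while the one-time buyer 1002 has frequency 0, recency 0, T 349, and a monetary_value of 0, which is exactly why one-time buyers are excluded from the Gamma-Gamma fit.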

Setting Up the BG/NBD Model

The Beta-Geometric/NBD model (BG/NBD) helps estimate how often customers will make purchases in the future:

```python
from lifetimes import BetaGeoFitter

# Fit the BG/NBD model on all customers, including one-time buyers:
# they carry the dropout information the model is designed to learn from
bgf = BetaGeoFitter(penalizer_coef=0.0)
bgf.fit(
    rfm_matrix['frequency'],
    rfm_matrix['recency'],
    rfm_matrix['T'],
)

# Predict purchases for the next 90 days (recency and T are in days)
prediction_period = 90
predicted_purchases = bgf.conditional_expected_number_of_purchases_up_to_time(
    prediction_period,
    rfm_matrix['frequency'],
    rfm_matrix['recency'],
    rfm_matrix['T'],
)
```

This model calculates expected purchases for a given time frame, such as 90 days. Once purchase frequency is modeled, you can incorporate transaction value predictions using Gamma-Gamma analysis.
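Besides expected purchases, BG/NBD gives the probability that a customer is still active given their history; in Lifetimes this is `bgf.conditional_probability_alive(frequency, recency, T)`. The closed-form expression from Fader, Hardie and Lee (2005) is short enough to write in NumPy. This sketch uses hypothetical parameter values in place of fitted ones (Lifetimes exposes fitted values on the model, e.g. `bgf.params_`, but check that attribute against your installed version).

```python
import numpy as np


def prob_alive(frequency, recency, T, r, alpha, a, b):
    """BG/NBD P(alive | x, t_x, T) = 1 / (1 + [x > 0] * a/(b+x-1) * ((alpha+T)/(alpha+t_x))**(r+x))."""
    x = np.asarray(frequency, dtype=float)
    t_x = np.asarray(recency, dtype=float)
    T = np.asarray(T, dtype=float)
    # Substitute x = 1 where x = 0 so b + x - 1 is never evaluated at b - 1
    x_safe = np.where(x > 0, x, 1.0)
    ratio = (a / (b + x_safe - 1)) * ((alpha + T) / (alpha + t_x)) ** (r + x_safe)
    # Customers with no repeat purchases are treated as alive by the model
    odds = np.where(x > 0, ratio, 0.0)
    return 1.0 / (1.0 + odds)


params = dict(r=0.24, alpha=4.41, a=0.79, b=2.43)  # hypothetical values
# Same history (2 repeat purchases, last one on day 30), observed for 60 vs. 300 days
p = prob_alive([2, 2, 0], [30, 30, 0], [60, 300, 300], **params)
print(p)
```

The first two customers differ only in how long they have been silent (30 vs. 270 days), so the second gets a much lower probability; the third, with no repeat purchases, is always 1.0 under this model.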

Adding Gamma-Gamma Analysis

The Gamma-Gamma model estimates each customer's expected average transaction value, the second ingredient of a CLV prediction. Fit it on returning customers only:

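```python
from lifetimes import GammaGammaFitter

# Fit the Gamma-Gamma model on returning customers only (frequency > 0)
ggf = GammaGammaFitter(penalizer_coef=0.0)
ggf.fit(
    active_customers['frequency'],
    active_customers['monetary_value'],
)

# Calculate 12-month CLV: `time` is in months and `discount_rate` is a monthly rate
time_horizon = 12
clv_predictions = ggf.customer_lifetime_value(
    bgf,  # the fitted BG/NBD model supplies the expected number of purchases
    active_customers['frequency'],
    active_customers['recency'],
    active_customers['T'],
    active_customers['monetary_value'],
    time=time_horizon,
    discount_rate=0.01,
)

# Add predictions to the customer matrix; assign() returns a new DataFrame,
# which avoids pandas' SettingWithCopyWarning on a filtered slice
active_customers = active_customers.assign(predicted_clv=clv_predictions)
```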
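Under the hood, the Gamma-Gamma model shrinks each customer's observed average spend toward the population average, putting more weight on the customer's own history as their number of repeat purchases grows. The conditional expectation is p(ν + x·m_x)/(p·x + q − 1), and Lifetimes exposes it as `ggf.conditional_expected_average_profit(frequency, monetary_value)`. The sketch below evaluates the formula with hypothetical values for p, q, and ν (called `v` here).

```python
import numpy as np


def expected_avg_value(frequency, monetary_value, p, q, v):
    """Gamma-Gamma E[average spend | x repeat purchases with observed mean m_x]."""
    x = np.asarray(frequency, dtype=float)
    m = np.asarray(monetary_value, dtype=float)
    return p * (v + x * m) / (p * x + q - 1)


p, q, v = 6.25, 3.74, 15.45  # hypothetical fitted parameters
population_mean = p * v / (q - 1)  # what the model expects with no history at all
# A customer who averages $50, seen with 1, 10, and 100 repeat purchases
est = expected_avg_value([1, 10, 100], [50.0, 50.0, 50.0], p, q, v)
print(population_mean, est)
```

With one repeat purchase the estimate sits well between the population mean and $50; with 100 it is almost exactly $50.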

By combining the BG/NBD and Gamma-Gamma models, you can estimate both the frequency and value of future transactions, giving you a complete picture of each customer's lifetime value.
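Conceptually, the 12-month figure is a sum of expected monthly revenue (expected purchases in each month times the expected average value), with each month discounted at `discount_rate`, which Lifetimes documents as a monthly rate. The sketch below shows only that discounting arithmetic with made-up inputs; it assumes the monthly expected purchases are already known, whereas Lifetimes derives them from the fitted BG/NBD model. `discounted_clv` is a hypothetical helper.

```python
import numpy as np


def discounted_clv(monthly_expected_purchases, avg_value, monthly_discount_rate):
    """Sum expected monthly revenue, discounting month i by (1 + d) ** i."""
    purchases = np.asarray(monthly_expected_purchases, dtype=float)
    months = np.arange(1, len(purchases) + 1)
    return float(np.sum(avg_value * purchases / (1 + monthly_discount_rate) ** months))


# Hypothetical customer: 0.5 expected purchases per month, $40 average order
print(round(discounted_clv([0.5] * 12, 40.0, 0.01), 2))
```

Without discounting this customer would be worth 12 × 0.5 × $40 = $240; a 1% monthly rate trims that by roughly $15.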

| Model Component | Purpose | Key Metrics |
|---|---|---|
| RFM Matrix | Summarizes customer behavior | Frequency, recency, T (customer age), monetary value |
| BG/NBD | Predicts purchase frequency | Expected purchases, probability a customer is still active |
| Gamma-Gamma | Estimates transaction value | Expected average transaction value |

This approach provides a structured way to analyze customer behavior and forecast their lifetime value, helping businesses make data-driven decisions.


Testing Model Accuracy

Before using CLV predictions for business decisions, it's crucial to ensure their accuracy. Here's how you can measure and refine your model's performance.

Measuring Accuracy

Start by splitting your data into calibration and holdout periods, fit the model on the calibration period, and compare its predicted purchases with what customers actually did in the holdout period:

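```python
import numpy as np
from lifetimes import BetaGeoFitter
from lifetimes.utils import calibration_and_holdout_data
from sklearn.metrics import mean_absolute_error, mean_squared_error

# Split the transaction log into calibration and holdout periods
calibration_summary = calibration_and_holdout_data(
    df, 'customer_id', 'transaction_date',
    calibration_period_end='2025-02-13',
    observation_period_end='2025-05-13',
)
cal = calibration_summary

# Fit on the calibration period, then predict purchases over each holdout window
bgf_cal = BetaGeoFitter(penalizer_coef=0.0)
bgf_cal.fit(cal['frequency_cal'], cal['recency_cal'], cal['T_cal'])
predicted_purchases = bgf_cal.conditional_expected_number_of_purchases_up_to_time(
    cal['duration_holdout'], cal['frequency_cal'], cal['recency_cal'], cal['T_cal'])
actual_purchases = cal['frequency_holdout']

mae = mean_absolute_error(actual_purchases, predicted_purchases)
rmse = np.sqrt(mean_squared_error(actual_purchases, predicted_purchases))
# MAPE is undefined when the actual value is 0, so compute it on nonzero actuals only
nz = actual_purchases > 0
mape = np.mean(np.abs((actual_purchases[nz] - predicted_purchases[nz]) / actual_purchases[nz])) * 100

# These metrics are in purchases, not dollars
print("Model Performance Metrics:")
print(f"MAE: {mae:.2f} purchases")
print(f"RMSE: {rmse:.2f} purchases")
print(f"MAPE: {mape:.1f}% (customers with holdout purchases only)")
```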

Here’s what these metrics tell you:

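| Metric | What It Measures |
|---|---|
| MAE | Average absolute error, in the units of the target (here, purchases) |
| RMSE | Square root of the mean squared error; penalizes larger errors more heavily |
| MAPE | Average percentage error relative to actual values; undefined when an actual value is 0 |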
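A hand computation on a few made-up values shows how differently the three metrics react: a single large miss inflates RMSE more than MAE, and MAPE has to skip customers whose actual value is 0. This uses plain NumPy so each formula is visible.

```python
import numpy as np

actual = np.array([0.0, 1.0, 2.0, 3.0])     # hypothetical holdout purchase counts
predicted = np.array([0.5, 1.0, 2.0, 5.0])  # hypothetical model predictions

errors = predicted - actual
mae = np.mean(np.abs(errors))
rmse = np.sqrt(np.mean(errors ** 2))
# MAPE divides by the actual value, so customers with 0 purchases are excluded
nonzero = actual != 0
mape = np.mean(np.abs(errors[nonzero] / actual[nonzero])) * 100

print(f"MAE={mae:.3f}  RMSE={rmse:.3f}  MAPE={mape:.1f}% "
      f"(on {nonzero.sum()} of {len(actual)} customers)")
```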

While these numbers provide a solid foundation, complement them with visual tools to spot where the model might be falling short.

Creating Result Charts

Visualization can uncover hidden trends and highlight areas for improvement. Use the following techniques to analyze your model's predictions:

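```python
import matplotlib.pyplot as plt
from lifetimes.plotting import (
    plot_calibration_purchases_vs_holdout_purchases,
    plot_frequency_recency_matrix,
    plot_period_transactions,
)

# Compare simulated and observed repeat-purchase counts for the fitted model
plot_period_transactions(bgf)

# Calibration vs. holdout purchases: calibration_summary must come from
# calibration_and_holdout_data, and the model should be fitted on its *_cal columns
plot_calibration_purchases_vs_holdout_purchases(bgf, calibration_summary)

# Plot frequency-recency matrix on a fresh figure
plt.figure()
plot_frequency_recency_matrix(bgf, max_frequency=10, max_recency=50)

# Compare actual vs predicted monetary values
# (actual_monetary_values and predicted_monetary_values come from your holdout split)
plt.figure(figsize=(10, 6))
plt.hist(actual_monetary_values, alpha=0.5, label='Actual')
plt.hist(predicted_monetary_values, alpha=0.5, label='Predicted')
plt.legend()
plt.title('Actual vs Predicted Customer Value Distribution')
plt.show()
```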

These visualizations can reveal patterns in errors and areas where the model's performance might be improved.
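Alongside the charts, a small table that groups customers by predicted value and compares average predicted and actual values per group often makes systematic over- or under-prediction easier to spot. This sketch uses synthetic data and a hypothetical helper, `calibration_table`; swap in your own holdout actuals and predictions.

```python
import numpy as np
import pandas as pd


def calibration_table(actual, predicted, n_bins=5):
    """Mean actual vs. mean predicted value within equal-sized bins of the prediction."""
    df = pd.DataFrame({"actual": actual, "predicted": predicted})
    # Ranking first breaks ties, so qcut can always form n_bins equal-sized groups
    df["bin"] = pd.qcut(df["predicted"].rank(method="first"), q=n_bins, labels=False)
    return df.groupby("bin")[["actual", "predicted"]].mean()


rng = np.random.default_rng(0)
predicted = rng.gamma(shape=2.0, scale=50.0, size=1_000)  # synthetic predictions
actual = predicted * rng.normal(1.0, 0.2, size=1_000)     # synthetic "actuals"
print(calibration_table(actual, predicted))
```

If the actual column drifts consistently above or below the predicted column in the top or bottom bins, the model is miscalibrated for those customers.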

Fixing Common Problems

If your model isn't performing as expected, try addressing these common challenges:

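- Data Sparsity: Customers with very few transactions can distort predictions. Because `frequency` counts repeat purchases, keeping customers with at least two purchases means filtering on `frequency >= 1`. Apply this filter to the Gamma-Gamma input; the BG/NBD model is designed to learn from one-time buyers as well:

```python
min_repeat_purchases = 1  # frequency = number of purchases after the first one
active_customers = rfm_matrix[rfm_matrix['frequency'] >= min_repeat_purchases]
```

- Outliers: Extreme monetary values can skew results. Cap them at a reasonable threshold, then pass the capped column to the Gamma-Gamma fit:

```python
q99 = active_customers['monetary_value'].quantile(0.99)
active_customers = active_customers.assign(
    monetary_value_capped=active_customers['monetary_value'].clip(upper=q99)
)
```

- Model Convergence: If the model struggles to converge, increase the penalizer coefficient and refit:

```python
from lifetimes import BetaGeoFitter

bgf = BetaGeoFitter(penalizer_coef=0.01)
bgf.fit(...)  # same frequency, recency, and T columns as before
```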

Finally, remember to retrain your model regularly as new data becomes available. This ensures your predictions stay relevant and actionable.

Using CLV Predictions

Exporting Results

You can export your Customer Lifetime Value (CLV) predictions for practical business applications. Here's how:

```python
import pandas as pd

# Generate the CLV prediction dataset for returning customers
clv_predictions = ggf.customer_lifetime_value(
    bgf,
    active_customers['frequency'],
    active_customers['recency'],
    active_customers['T'],
    active_customers['monetary_value'],
    time=12,
    discount_rate=0.01,
)

# Combine predictions with the customer summary; reset_index() turns the
# customer_id index into a column so it survives index=False exports
customer_predictions = pd.concat([
    active_customers.drop(columns='predicted_clv', errors='ignore'),
    clv_predictions.rename('predicted_clv'),
], axis=1).reset_index()

# Categorize customers into tiers based on their predicted CLV
customer_predictions['value_tier'] = pd.qcut(
    customer_predictions['predicted_clv'],
    q=3,
    labels=['Low', 'Medium', 'High'],
)

# Export predictions to CSV and Excel formats (Excel export needs openpyxl)
customer_predictions.to_csv('clv_predictions.csv', index=False)
customer_predictions.to_excel('clv_predictions.xlsx', sheet_name='CLV Predictions', index=False)
```
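Tiering with `pd.qcut` splits customers into equal-sized groups by predicted CLV. The small sketch below uses made-up values to show the resulting labels and why resetting the index matters: the Lifetimes summary is indexed by customer_id, so exporting with `index=False` without first resetting the index would drop the IDs.

```python
import pandas as pd

# Hypothetical predicted CLV values indexed by customer_id
predicted_clv = pd.Series(
    [10.0, 20.0, 30.0, 40.0, 50.0, 60.0],
    index=pd.Index([101, 102, 103, 104, 105, 106], name="customer_id"),
    name="predicted_clv",
)

tiers = predicted_clv.to_frame()
tiers["value_tier"] = pd.qcut(tiers["predicted_clv"], q=3, labels=["Low", "Medium", "High"])
# Move customer_id from the index into a regular column before exporting
tiers = tiers.reset_index()
print(tiers)
```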

For database integration, you can save the predictions directly into your database:

```python
from sqlalchemy import create_engine

# Create a connection to the database (placeholder credentials; a PostgreSQL
# driver such as psycopg2 must be installed)
engine = create_engine('postgresql://user:password@localhost:5432/customer_db')

# Export the predictions to a database table, replacing it if it already exists
customer_predictions.to_sql(
    'customer_lifetime_value',
    engine,
    if_exists='replace',
    index=False,
)
```
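Before pointing the export at a production database, you can dry-run the same `to_sql` call against an in-memory SQLite database, which pandas accepts directly through a `sqlite3` connection. The predictions table below is made up for illustration.

```python
import sqlite3

import pandas as pd

# Hypothetical predictions table with the same columns as the export above
customer_predictions = pd.DataFrame({
    "customer_id": [1001, 1002, 1003],
    "predicted_clv": [125.40, 48.10, 310.75],
    "value_tier": ["Medium", "Low", "High"],
})

# In-memory database: nothing is written to disk
with sqlite3.connect(":memory:") as conn:
    customer_predictions.to_sql(
        "customer_lifetime_value", conn, if_exists="replace", index=False
    )
    check = pd.read_sql("SELECT COUNT(*) AS n FROM customer_lifetime_value", conn)
print(check)
```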

Model Maintenance

Once your predictions are exported, it's important to keep your CLV models up-to-date and running smoothly. Here are a few steps to ensure ongoing accuracy:

- Regular Retraining Schedule

Set up a routine to retrain your CLV model using fresh data. For example, you can use an automated workflow with Airflow:

```python
from datetime import datetime

from airflow import DAG
from airflow.operators.python import PythonOperator


def retrain_clv_model():
    # Load updated transaction data
    # Retrain the model with new parameters
    # Save updated predictions
    pass


# Define the DAG for monthly retraining (the `schedule` argument needs Airflow 2.4+;
# catchup=False skips backfilling runs for past months)
with DAG(
    'clv_model_retraining',
    schedule='@monthly',
    start_date=datetime(2025, 5, 1),
    catchup=False,
) as dag:
    # Define the retraining task
    retrain_task = PythonOperator(
        task_id='retrain_clv_model',
        python_callable=retrain_clv_model,
    )
```

- Performance Monitoring

Keep an eye on metrics like Mean Absolute Error (MAE), Root Mean Square Error (RMSE), and prediction bias. If these metrics start to deviate from historical benchmarks, review your model's parameters and input data.

- Data Quality Checks

Ensure your transaction data remains reliable and accurate. Here's a quick validation function:

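```python
import pandas as pd


def validate_transaction_data(df: pd.DataFrame) -> dict:
    # Share of missing values per column, in percent
    missing_pct = df.isnull().sum() / len(df) * 100
    # Days spanned by the log (transaction_date must already be a datetime column)
    date_range = df['transaction_date'].max() - df['transaction_date'].min()
    # Summary statistics of transaction amounts
    value_stats = df['monetary_value'].describe()
    return {
        'missing_data': missing_pct,
        'date_coverage': date_range.days,
        'value_distribution': value_stats,
    }
```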
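The performance-monitoring step can be automated with a small check that compares the latest MAE against a stored baseline and reports the mean bias. This is a sketch: the helper name `monitor`, the 20% tolerance, and the sample numbers are all assumptions to adapt to your own benchmarks.

```python
import numpy as np


def monitor(actual, predicted, baseline_mae, tolerance=0.2):
    """Compare current error and bias against a stored baseline MAE."""
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    mae = float(np.mean(np.abs(predicted - actual)))
    bias = float(np.mean(predicted - actual))  # > 0 means the model over-predicts
    degraded = mae > baseline_mae * (1 + tolerance)
    return {"mae": mae, "bias": bias, "degraded": degraded}


report = monitor(actual=[1, 0, 3, 2], predicted=[1.5, 0.5, 3.5, 2.5], baseline_mae=0.4)
print(report)
```

Here every prediction is 0.5 too high, so the bias equals the MAE and the 0.5 error exceeds the 0.48 limit (0.4 plus 20%), flagging the model for review.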

Additional Tools

To enhance your CLV predictions further, consider using advanced analytics tools. These can include:

- Real-time analytics dashboards for monitoring customer behavior.

- Campaign performance tracking to measure the impact of marketing efforts.

- Automated CLV reporting for streamlined insights.

- Business intelligence visualization tools to turn data into actionable strategies.

These resources can help you take your CLV insights and use them to drive smarter marketing decisions and better customer engagement.

Summary

This guide has shown how Lifetimes simplifies Customer Lifetime Value (CLV) prediction by combining RFM (Recency, Frequency, Monetary) analysis with probabilistic modeling. Using methods like BG/NBD and Gamma-Gamma, the library provides a structured way to forecast customer value.

For example, the CDNOW sample dataset bundled with Lifetimes, which is already in RFM summary form, can be modeled in a few lines:

```python
from lifetimes import BetaGeoFitter, GammaGammaFitter
from lifetimes.datasets import load_cdnow_summary_data_with_monetary_value

data = load_cdnow_summary_data_with_monetary_value()

# BG/NBD uses every customer, including one-time buyers
bgf = BetaGeoFitter()
bgf.fit(data['frequency'], data['recency'], data['T'])

# Gamma-Gamma uses returning customers only
returning_customers = data[data['frequency'] > 0]
ggf = GammaGammaFitter()
ggf.fit(returning_customers['frequency'], returning_customers['monetary_value'])
```

To ensure accurate predictions, consider the following tips:

- Define clear observation periods in your dataset.

- Be mindful of performance on large datasets, since the library fits its models in a single thread.

- Regularly retrain your models to keep predictions relevant.

FAQs

How do I prepare my transaction data for the Lifetimes library in Python?

To get the most out of the Lifetimes library, your transaction data needs to follow a specific structure. Make sure your dataset includes these essential fields:

- Customer ID: A unique identifier assigned to each customer.

- Transaction Date: The date of every transaction, in a format pandas can parse (YYYY-MM-DD is the least ambiguous).

- Monetary Value: The total amount spent in each transaction, stored as a number (e.g., 123.45 rather than "$123.45").

Before diving into analysis, you might need to preprocess your data. This usually means converting dates into a datetime format with pandas and aggregating item-level rows into one row per transaction; Lifetimes' `summary_data_from_transaction_data` then builds the customer-level summary for you. Getting the formatting right is key to generating precise customer lifetime value (CLV) predictions.

What’s the difference between the BG/NBD and Gamma-Gamma models for predicting Customer Lifetime Value (CLV)?

The BG/NBD model (Beta Geometric/Negative Binomial Distribution) is designed to forecast how often a customer will make repeat purchases and when they might stop buying altogether. It primarily looks at transaction frequency and customer retention, making it useful for understanding purchasing patterns over time.

The Gamma-Gamma model focuses on predicting the monetary value of those transactions. It assumes that while average transaction values differ among customers, each individual customer tends to spend consistently, and that a customer's spend is independent of how often they purchase.

When used together, these models offer a powerful combination: the BG/NBD predicts the number of future purchases, while the Gamma-Gamma model estimates the revenue from those purchases. This pairing provides a more detailed and accurate picture of Customer Lifetime Value (CLV).

How can I improve the accuracy of my CLV predictions if my model isn't performing well?

If your customer lifetime value (CLV) model isn't hitting the mark, there are a few ways to fine-tune it for better results:

- Clean up your data: Start by ensuring your data is accurate and up-to-date. Eliminate duplicate entries, fill in missing information, and make sure the customer behavior data you're using reflects current trends.

- Tweak model settings: Experiment with the parameters in the Lifetimes library. Adjusting things like penalizer coefficients or frequency/recency thresholds could lead to better performance.

- Group your customers: Not all customers behave the same way. Try segmenting them based on their behavior - like high-value versus low-value buyers - and create separate models for each group to better capture their unique purchasing patterns.

- Keep an eye on performance: Regularly check how your model is doing with metrics like RMSE or MAE. If you notice a big gap between predictions and actual outcomes, revisit your assumptions and make the necessary adjustments.

By consistently refining these areas, you’ll improve the accuracy of your CLV model and be able to make smarter, data-driven decisions for your business.