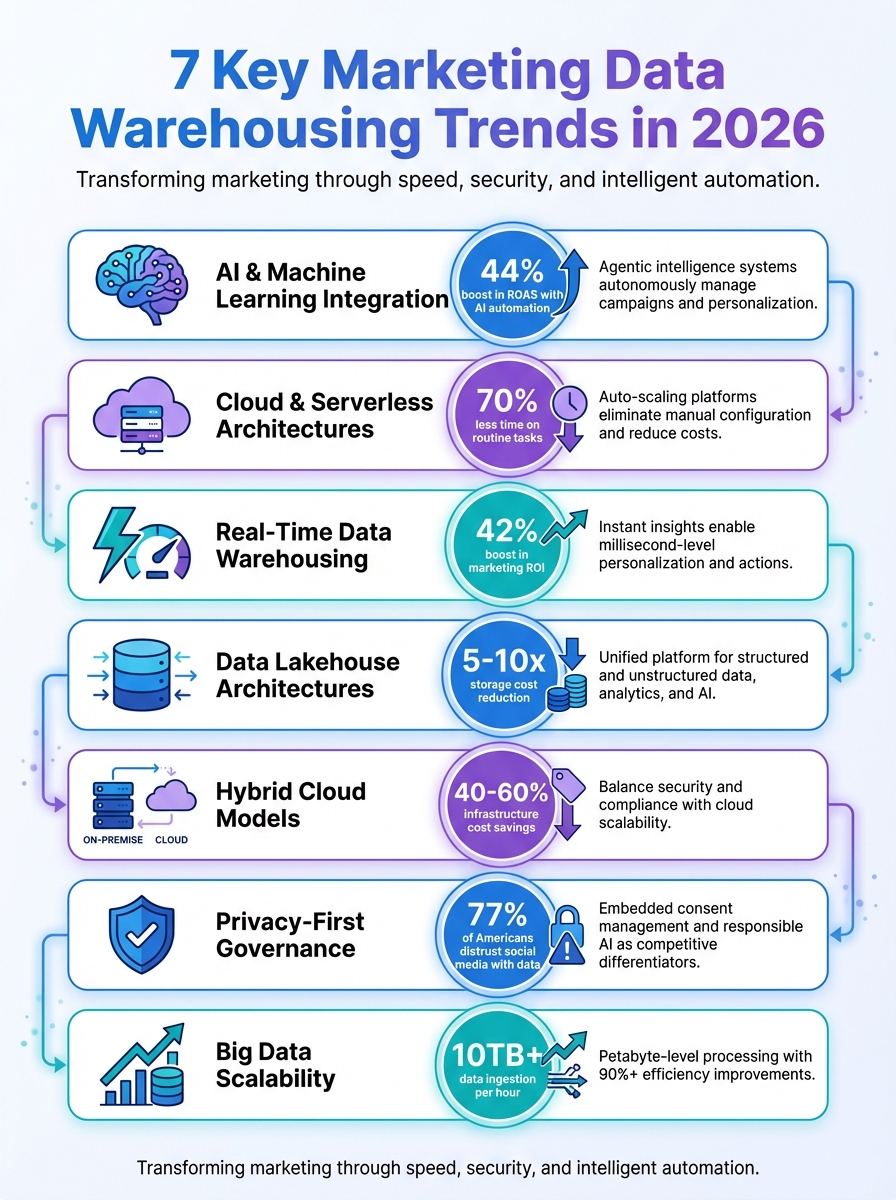

Marketing data warehousing in 2026 is all about speed, integration, and smarter decision-making. With AI, real-time analytics, and privacy-focused tools leading the charge, businesses are transforming how they handle and act on data. Here's a quick rundown of the key trends shaping the future:

- AI Automation: Data warehouses increasingly handle tasks like campaign optimization and personalization with minimal human input.

- Real-Time Analytics: Instant insights allow for actions like on-site personalization within milliseconds.

- Cloud and Serverless Systems: Faster, more scalable platforms reduce costs and manual work.

- Lakehouse Architectures: Unified systems for structured and unstructured data simplify analytics and machine learning.

- Hybrid Cloud Models: Combine on-premises security with cloud scalability for compliance and performance.

- Privacy-First Governance: Governance tools track data quality KPIs, enforce consent, and build trust with customers.

- Big Data Scalability: Advanced platforms handle petabyte-level data and deliver faster query results.

These trends are reshaping how companies use data to drive marketing strategies, improve ROI, and meet evolving customer expectations. Utilizing the top marketing analytics tools is essential for navigating these shifts.

7 Marketing Data Warehousing Trends for 2026



1. AI and Machine Learning Integration

By 2026, marketing data warehouses are evolving into agentic intelligence systems that can autonomously handle complex tasks such as running multi-step campaigns, personalizing customer journeys, and making real-time decisions with minimal human input. This shift turns data warehouses from passive storage into active optimization engines and changes how teams approach data processing.

Zero ETL patterns and managed pipelines such as Snowflake Openflow and AWS Glue automate data movement, often replicating data in near real time and reducing reliance on nightly batch jobs. For marketing analysts, this means reclaiming the 10–15 hours per week they used to spend manually pulling reports and normalizing data, and redirecting that time to strategic planning.

Impact on Marketing Data Efficiency

With conversational analytics - also known as Agentic BI - users can ask questions in plain language and receive instant visualizations. Automated governance ensures data accuracy directly in platforms like Snowflake Horizon and Unity Catalog. Roman Vinogradov, VP of Product at Improvado, highlights the shift:

Instead of building dashboards for every granularity, you get ad-hoc snapshots with the insights you need in the moment. It's not static anymore.

This automation reduces the need for static dashboards and catches potential data issues before they affect analysis. While the systems handle the heavy lifting, humans remain responsible for critical business decisions.

Scalability for Enterprise Use

Automation has also reinforced the principle of data gravity, which is reshaping enterprise data architecture. Instead of sending sensitive customer data to external AI vendors, companies now bring AI models directly to their unified data systems. This approach improves security, reduces operational costs, and keeps all training data in one centralized location.

One example is Turkish home goods retailer Karaca, which implemented AI-driven automation for Google Shopping campaigns between May 2024 and February 2025. The system autonomously handled bidding strategies and budget allocation across a catalog of over 2,000 products. The results? A 44% boost in ROAS and a 31% increase in revenue.

Support for Real-Time Analytics

Real-time event streaming is taking agentic intelligence to the next level. For instance, users can now be added to retargeting audiences within 15 minutes of visiting a website, leading to 15–20% improvements in cost-per-acquisition.
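The mechanics behind fast retargeting can be sketched in a few lines. The example below is a minimal in-memory illustration, assuming a hypothetical `Event` record and `RetargetingAudience` class (neither comes from any ad platform's API): each page view adds or refreshes a visitor's membership as soon as the event is processed, and membership lapses after a configurable window.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Dict, Set


@dataclass
class Event:
    """Hypothetical clickstream event."""
    user_id: str
    event_type: str  # e.g. "page_view", "add_to_cart"
    ts: datetime


@dataclass
class RetargetingAudience:
    """Hypothetical audience whose members expire `ttl` after their last qualifying visit."""
    ttl: timedelta = timedelta(days=30)
    expires_at: Dict[str, datetime] = field(default_factory=dict)

    def process(self, event: Event) -> None:
        # Streaming path: update membership as each event arrives,
        # rather than waiting for a nightly batch rebuild.
        if event.event_type == "page_view":
            self.expires_at[event.user_id] = event.ts + self.ttl

    def active_members(self, now: datetime) -> Set[str]:
        return {user for user, expiry in self.expires_at.items() if expiry > now}


t0 = datetime(2026, 1, 15, 12, 0)
audience = RetargetingAudience()
audience.process(Event("u1", "page_view", t0))
audience.process(Event("u2", "add_to_cart", t0))
print(audience.active_members(t0 + timedelta(minutes=15)))  # {'u1'}
```

In production, the same logic would typically run inside a stream processor and sync membership to an ad platform's audience API; that sync step is omitted here.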

Databricks offers a compelling example of this evolution. Between 2025 and 2026, they implemented a marketing lakehouse using Unity Catalog and a medallion architecture (Bronze, Silver, Gold layers). This setup powered "Marge", an internal AI-driven analytics assistant that uncovers campaign insights and enables seamless omnichannel automation.
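The medallion pattern itself is easy to illustrate. The following is a plain-Python sketch of the general Bronze/Silver/Gold idea using made-up campaign rows; it is not Databricks' implementation, and a real lakehouse would run these steps as Spark or SQL jobs over tables rather than Python lists.

```python
from collections import defaultdict
from typing import Dict, List

# Bronze: raw campaign events exactly as ingested, including a duplicate
# delivery, inconsistent casing, and a malformed value (all illustrative).
bronze: List[dict] = [
    {"event_id": "e1", "campaign": "Spring_Sale", "clicks": "10", "spend": "5.00"},
    {"event_id": "e1", "campaign": "Spring_Sale", "clicks": "10", "spend": "5.00"},
    {"event_id": "e2", "campaign": "spring_sale", "clicks": "4", "spend": "2.50"},
    {"event_id": "e3", "campaign": "Retarget", "clicks": "n/a", "spend": "1.00"},
    {"event_id": "e4", "campaign": "Retarget", "clicks": "6", "spend": "3.00"},
]


def to_silver(rows: List[dict]) -> List[dict]:
    """Silver: deduplicated, typed, normalized records."""
    seen, cleaned = set(), []
    for r in rows:
        if r["event_id"] in seen:
            continue  # drop duplicate deliveries
        try:
            record = {
                "event_id": r["event_id"],
                "campaign": r["campaign"].lower(),
                "clicks": int(r["clicks"]),
                "spend": float(r["spend"]),
            }
        except ValueError:
            continue  # a real pipeline would quarantine bad rows, not discard them
        seen.add(r["event_id"])
        cleaned.append(record)
    return cleaned


def to_gold(rows: List[dict]) -> Dict[str, dict]:
    """Gold: per-campaign aggregates ready for dashboards."""
    totals: Dict[str, dict] = defaultdict(lambda: {"clicks": 0, "spend": 0.0})
    for r in rows:
        totals[r["campaign"]]["clicks"] += r["clicks"]
        totals[r["campaign"]]["spend"] += r["spend"]
    return {
        campaign: {**t, "cpc": round(t["spend"] / t["clicks"], 4) if t["clicks"] else None}
        for campaign, t in totals.items()
    }


silver = to_silver(bronze)
gold = to_gold(silver)
print(gold)
```

Each layer only reads from the one before it, so a fix to the Silver cleaning rules can be replayed from Bronze without re-ingesting source data.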

Integration with Existing Marketing Tools

The shift toward composable data stacks - where best-of-breed tools are connected via APIs with AI acting as the orchestration layer - is becoming the new standard. By 2024, 90% of companies had adopted some form of MACH architecture (Microservices, API-first, Cloud-native, Headless).

For example, Acronym developed a unified operating model for a client managing multiple brands with separate Salesforce and Adobe/Google Analytics systems between 2025 and 2026. By embedding "knowledge blocks" about the client’s operations into large language models (LLMs), AI agents could provide enhanced media planning and deeper analysis. This reduced wasted spend and eliminated targeting overlaps.

For mid-market companies, these AI-ready analytics stacks remain accessible and cost-effective. They deliver value through seamless integration and less manual work, and they can grow into enterprise-grade analytics as needs expand.

2. Cloud Adoption and Serverless Architectures

As businesses push further into AI-powered automation, they’re also diving into cloud-native, serverless architectures to streamline operations and boost efficiency. Marketing teams, for example, are moving away from manually managed data warehouses to serverless systems that handle patching, tuning, and scaling automatically. This shift means database administrators now spend 70% less time on routine tasks when working in cloud-native environments.

The advantages of cloud-based systems are hard to ignore. Analytical queries in these environments can run 10–100x faster than their on-premises counterparts, and scaling to petabyte-level analytics no longer requires manual configuration. Industry forecasts projected that 75% of enterprises would migrate to cloud-based systems for advanced data management by 2025.

Impact on Marketing Data Efficiency

Cloud-native platforms add flexibility by separating storage, compute, and metadata. Marketing teams can scale compute instantly during high-demand periods like Black Friday and configure warehouses to pause automatically after 5–10 minutes of inactivity.
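To see why auto-suspend matters for cost, here is a simplified billing model. It is a sketch under stated assumptions: the warehouse resumes instantly when a query arrives, stays on while queries run, and suspends after `auto_suspend` idle minutes. Per-resume minimum charges and startup time are ignored, and `billed_minutes` is a hypothetical helper, not any vendor's billing logic.

```python
from typing import List, Optional, Tuple


def billed_minutes(queries: List[Tuple[float, float]], auto_suspend: float) -> float:
    """Minutes of compute billed under a simple auto-suspend model.

    `queries` holds (start_minute, duration_minutes) pairs. The warehouse resumes
    when a query arrives and suspends `auto_suspend` minutes after the last
    activity. Startup time and minimum-charge rules are ignored.
    """
    billed = 0.0
    period_start: Optional[float] = None
    suspend_at: Optional[float] = None
    for start, duration in sorted(queries):
        end = start + duration
        if suspend_at is None or start > suspend_at:
            # Warehouse was suspended: close the previous running period.
            if period_start is not None:
                billed += suspend_at - period_start
            period_start = start
            suspend_at = end + auto_suspend
        else:
            # Warehouse still running: push the suspend time out.
            suspend_at = max(suspend_at, end + auto_suspend)
    if period_start is not None:
        billed += suspend_at - period_start
    return billed


# Two short bursts of dashboard queries an hour apart (illustrative).
bursts = [(0, 2), (60, 3)]
print(billed_minutes(bursts, auto_suspend=10))  # 25.0
print(billed_minutes(bursts, auto_suspend=60))  # 123.0
```

With a 10-minute threshold the warehouse sleeps between bursts; with a 60-minute threshold it never suspends, so nearly five times as many minutes are billed for the same work.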

Serverless models also reduce the manual ETL (Extract, Transform, Load) work that has traditionally consumed 60–80% of data engineering time. By contrast, on-premises solutions can cost 3–5x more once maintenance, security, and engineering hours are factored in.

Scalability for Enterprise Use

Serverless data warehouses like Google BigQuery and Snowflake are built to handle the dynamic needs of enterprises. Whether it’s a major campaign launch or seasonal traffic spikes, these platforms offer instant scalability. Notably, 62% of organizations now use their data warehouse as the central "source of truth" for decision-making.

Daniel Avancini, Chief Data Officer at Indicium, highlights the benefits of this approach:

Object storage combined with serverless compute offers elasticity, simplicity and lower cost. An outdated array of Hadoop services no longer fits the modern data landscape.

Pricing models have evolved as well. Instead of large upfront costs, companies pay based on usage. Snowflake, for instance, bills compute per second plus storage, while BigQuery's on-demand model charges per query based on bytes scanned. Enterprises with predictable workloads can get discounts of up to 40% through capacity commitments. This flexibility lowers costs and supports the rapid data processing that real-time analytics requires.
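The two pricing styles can be compared with simple arithmetic. The sketch below uses placeholder rates (`credits_per_hour`, `price_per_credit`, and `price_per_tib` are illustrative inputs, not published prices), models per-second compute billing with an assumed 60-second minimum per resume, models on-demand pricing per TiB scanned, and applies an optional commitment discount to compute.

```python
TIB = 2 ** 40  # bytes in one tebibyte


def compute_time_cost(runtime_s: float, credits_per_hour: float, price_per_credit: float,
                      min_billed_s: float = 60, discount: float = 0.0) -> float:
    """Per-second compute billing with an assumed minimum billed duration per resume."""
    billed_s = max(runtime_s, min_billed_s)
    return billed_s / 3600 * credits_per_hour * price_per_credit * (1 - discount)


def bytes_scanned_cost(bytes_scanned: int, price_per_tib: float) -> float:
    """On-demand billing proportional to the bytes a query scans."""
    return bytes_scanned / TIB * price_per_tib


# Placeholder rates for illustration only; check current vendor price lists.
print(compute_time_cost(30, credits_per_hour=1, price_per_credit=3.0))
print(compute_time_cost(3600, credits_per_hour=2, price_per_credit=3.0, discount=0.4))
print(bytes_scanned_cost(TIB // 2, price_per_tib=6.0))
```

Under these assumptions, a 30-second query is billed as a full minute on the per-second model, while the scan-based model charges the same whether a query takes one second or ten, so partitioning and selecting fewer columns matter most there.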

Support for Real-Time Analytics

Serverless architectures also make real-time analytics practical. They support Change Data Capture (CDC) and event streaming, so businesses can process customer behavior as it happens and make real-time decisions, such as calculating price sensitivity or churn risk, within 100–300 milliseconds. Companies using real-time data processing report 23% higher revenue growth than those relying on batch processing.
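A CDC consumer ultimately has to apply inserts, updates, and deletes to a replica in log order. The sketch below assumes a simplified, hypothetical change-event shape (`op`, `key`, `row`, `lsn`); real CDC tools such as Debezium emit richer envelopes. Tracking the last applied log sequence number per key makes replays harmless, which matters because streaming pipelines often redeliver events.

```python
from typing import Any, Dict


class Replica:
    """Hypothetical warehouse-side replica kept in sync from a CDC stream."""

    def __init__(self) -> None:
        self.rows: Dict[Any, dict] = {}
        self.last_lsn: Dict[Any, int] = {}  # per-key high-water mark, kept even after deletes

    def apply(self, change: dict) -> bool:
        """Apply one change event; return False if it is stale or a replay."""
        key, lsn = change["key"], change["lsn"]
        if lsn <= self.last_lsn.get(key, -1):
            return False
        op = change["op"]
        if op == "insert":
            self.rows[key] = dict(change["row"])
        elif op == "update":
            self.rows[key] = {**self.rows.get(key, {}), **change["row"]}  # partial update
        elif op == "delete":
            self.rows.pop(key, None)
        else:
            raise ValueError(f"unknown op: {op}")
        self.last_lsn[key] = lsn
        return True


changes = [
    {"op": "insert", "key": 1, "row": {"email": "a@example.com", "plan": "free"}, "lsn": 1},
    {"op": "update", "key": 1, "row": {"plan": "pro"}, "lsn": 2},
    {"op": "insert", "key": 2, "row": {"email": "b@example.com", "plan": "free"}, "lsn": 3},
    {"op": "delete", "key": 2, "lsn": 4},
]
replica = Replica()
for change in changes:
    replica.apply(change)
print(replica.rows)  # {1: {'email': 'a@example.com', 'plan': 'pro'}}
```

Keeping the high-water mark after a delete is the key design choice: without it, a redelivered insert would resurrect a row the source has already removed.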

Integration with Existing Marketing Tools

Beyond real-time processing, cloud-native warehouses integrate with modern marketing tools like CRMs, CDPs, and website analytics tools. Through APIs and native integrations, these systems create a unified data environment that draws applications and analytics toward the data, a dynamic often referred to as "data gravity". By consolidating data on a single platform, businesses can make faster decisions and avoid the inefficiencies of moving data across multiple systems.

Jeff Lee, Head of Community and Digital Strategy at ASUS, shares how this transformation has impacted their operations:

Improvado helped us gain full control over our marketing data globally... Today, we can finally build any report we want in minutes due to the vast number of data connectors and rich granularity.

3. Real-Time Data Warehousing and Streaming Analytics

The transition from batch processing to real-time streaming is reshaping how marketing teams react to customer behavior. Traditional data warehouses, which updated on a nightly or weekly basis, introduced delays that often left insights stale. Real-time streaming largely eliminates those delays, allowing marketers to act on insights immediately. As Evren Eryurek, PhD, Director of Product Management at Google Cloud, explains:

Real-time data from event sources provides a high-value opportunity to act on a perishable insight within a tight window. That means businesses need to act fast.

Forecasts projected that more than 25% of global data would be real-time by 2025. The shift matters because 88% of consumers are more likely to make a purchase when brands personalize their experiences in real time. A customer lingering on the checkout page or abandoning a cart, for example, presents a fleeting chance to engage.

Impact on Marketing Data Efficiency

Real-time data warehousing removes the delays that once hindered traditional systems, enabling marketers to respond instantly to customer behavior. Businesses using real-time analytics report a 42% boost in marketing ROI and a 30% drop in customer acquisition costs. Modern systems leverage tools like Change Data Capture (CDC) and event streaming platforms such as Apache Kafka to process customer actions - clicks, purchases, and more - as they happen. This allows for immediate actions, like halting ads for customers who’ve already made a purchase, preventing unnecessary spending. These systems are also designed to handle high demand, ensuring smooth operations even during peak periods.
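Halting spend on customers who have just converted is a small piece of event-driven logic. The sketch below assumes hypothetical audience names and an illustrative `AdSuppressor` class; it returns the removals to push to ad platforms, and the actual platform API calls are deliberately left out.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass
class AdSuppressor:
    """Hypothetical handler that pulls purchasers out of paid-media audiences."""
    audiences: Dict[str, Set[str]] = field(default_factory=dict)
    converted: Set[str] = field(default_factory=set)

    def handle(self, event: dict) -> List[Tuple[str, str]]:
        """Return (audience, user_id) removals to push to ad platforms."""
        user = event["user_id"]
        if event["type"] != "purchase" or user in self.converted:
            return []  # only the first purchase triggers suppression
        self.converted.add(user)
        removals = []
        for name, members in self.audiences.items():
            if user in members:
                members.discard(user)
                removals.append((name, user))
        return removals


suppressor = AdSuppressor(audiences={"cart_abandoners": {"u1", "u2"}, "site_visitors": {"u1"}})
print(suppressor.handle({"user_id": "u1", "type": "purchase"}))
# [('cart_abandoners', 'u1'), ('site_visitors', 'u1')]
```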

Scalability for Enterprise Use

Real-time streaming doesn’t just improve efficiency - it also supports massive scalability for enterprise needs. Cloud-native warehouses separate storage from compute, letting businesses instantly scale up resources during high-traffic events like Black Friday or major campaign launches. These platforms can handle over 10TB of data per hour without requiring specialized hardware. Once traffic subsides, compute scales back down, and warehouses can be configured to pause after 5–10 minutes of inactivity to control costs. Companies adopting real-time processing report 23% higher revenue growth than those sticking to batch processing. This architecture also accommodates both structured and unstructured data, solving synchronization issues that previously arose from managing separate systems.

Support for Real-Time Analytics

Modern platforms are adopting "Zero ETL" patterns, which replicate data in near real time and speed up operations. AI-powered tools can further reduce pipeline maintenance by up to 70% through automated anomaly detection and self-healing processes.
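Automated anomaly detection on pipelines often starts with something as simple as checking load volumes against recent history. The following sketch uses a z-score on daily row counts with an assumed threshold of 3 standard deviations; it is one common heuristic, not the method any particular vendor uses, and it covers detection only, not self-healing.

```python
from statistics import mean, stdev
from typing import Sequence


def is_volume_anomaly(history: Sequence[int], latest: int, z_threshold: float = 3.0) -> bool:
    """Flag a load whose row count is more than `z_threshold` standard deviations from recent loads."""
    if len(history) < 2:
        return False  # not enough history to estimate variation
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu  # perfectly stable history: any change is unusual
    return abs(latest - mu) / sigma > z_threshold


daily_rows = [10_000, 10_200, 9_800, 10_100, 9_900]
print(is_volume_anomaly(daily_rows, 10_050))  # False
print(is_volume_anomaly(daily_rows, 4_000))   # True: likely a partial or failed load
```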

Megan DeGruttola from Twilio Segment highlights the importance of keeping data fresh for AI:

AI doesn't just need lots of good data, it needs fresh, relevant, and context-rich data - in an instant.

With 97% of brands planning to increase their AI investments by 2030, having real-time data capabilities has shifted from being a nice-to-have to an absolute necessity.

Integration with Existing Marketing Tools

Real-time data doesn’t just improve speed - it also integrates seamlessly with marketing systems. By using event-driven architectures and direct database connections, real-time warehouses enable near-instant communications, such as sending cart abandonment alerts within seconds instead of hours. For 62% of organizations, the data warehouse has become the central "source of truth". Tools like the Marketing Analytics Tools Directory help businesses compare options for real-time analytics, campaign tracking, and business intelligence. By consolidating data into unified platforms, companies eliminate the inefficiencies of siloed systems while retaining the flexibility needed for instant personalization.
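A cart-abandonment trigger reduces to a timeout rule over the event stream. This sketch assumes hypothetical `add_to_cart` and `checkout` event types and a 30-minute timeout; a streaming engine would evaluate the rule continuously with timers, while here the function simply re-evaluates the events it is given.

```python
from datetime import datetime, timedelta
from typing import Dict, Iterable, Set


def abandoned_carts(events: Iterable[dict], now: datetime,
                    timeout: timedelta = timedelta(minutes=30)) -> Set[str]:
    """Users whose most recent add-to-cart has gone `timeout` without a later checkout."""
    last_add: Dict[str, datetime] = {}
    checked_out: Set[str] = set()
    for e in sorted(events, key=lambda e: e["ts"]):
        if e["type"] == "add_to_cart":
            last_add[e["user_id"]] = e["ts"]
            checked_out.discard(e["user_id"])  # a new cart reopens the window
        elif e["type"] == "checkout":
            checked_out.add(e["user_id"])
    return {u for u, ts in last_add.items() if u not in checked_out and now - ts >= timeout}


t0 = datetime(2026, 3, 1, 10, 0)
stream = [
    {"user_id": "u1", "type": "add_to_cart", "ts": t0},
    {"user_id": "u2", "type": "add_to_cart", "ts": t0},
    {"user_id": "u2", "type": "checkout", "ts": t0 + timedelta(minutes=5)},
    {"user_id": "u3", "type": "add_to_cart", "ts": t0 + timedelta(minutes=25)},
]
print(abandoned_carts(stream, now=t0 + timedelta(minutes=31)))  # {'u1'}
```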

4. Data Lakehouse Architectures

Lakehouse architectures are revolutionizing how businesses handle data by combining the structured approach of warehouses with the flexibility of data lakes. This unified model supports all types of data, making it a powerful tool for managing analytics, machine learning, and AI. Daniel Avancini, Chief Data Officer at Indicium, highlights its impact:

The Lakehouse has emerged as the architectural north star. It gives organizations a single platform for structured and unstructured data, analytics, machine learning and AI training.

For marketing teams, this means consolidating everything from campaign metrics to customer images in one streamlined platform.

Impact on Marketing Data Efficiency

Lakehouses improve efficiency by tracking data at the file level rather than by directory. This speeds up query planning, enables precise atomic commits, and supports "time travel" (querying earlier versions of a table) without copying the table. Columnar formats like Parquet or ORC can cut storage costs by 5–10x. Meanwhile, automatic tuning and patching reduce database administrators' maintenance workload by as much as 70%.
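File-level tracking is what makes time travel cheap: each commit records which immutable data files make up the table, so an older version is just an older file list. The toy `SnapshotTable` below is a hypothetical sketch of that idea, loosely modeled on how open table formats work; it is not the actual metadata layout of Iceberg, Delta Lake, or Hudi. Old files must be retained for time travel to work, so they still consume storage until they are expired.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, List, Optional


@dataclass
class SnapshotTable:
    """Toy table: immutable data files plus a list of snapshots (sets of live files)."""
    files: Dict[str, List[dict]] = field(default_factory=dict)
    snapshots: List[FrozenSet[str]] = field(default_factory=list)

    def commit(self, add: Optional[Dict[str, List[dict]]] = None,
               remove: FrozenSet[str] = frozenset()) -> int:
        """Publish a new snapshot from the previous one; returns the snapshot id."""
        add = add or {}
        current = self.snapshots[-1] if self.snapshots else frozenset()
        for name, rows in add.items():
            self.files[name] = rows  # new files are written; existing files are never modified
        self.snapshots.append((current - remove) | frozenset(add))
        return len(self.snapshots) - 1

    def scan(self, snapshot_id: Optional[int] = None) -> List[dict]:
        """Read the table as of a snapshot (default: latest) by listing its files."""
        if not self.snapshots:
            return []
        sid = len(self.snapshots) - 1 if snapshot_id is None else snapshot_id
        return [row for name in sorted(self.snapshots[sid]) for row in self.files[name]]


table = SnapshotTable()
s0 = table.commit(add={"f1.parquet": [{"campaign": "a", "clicks": 10}]})
s1 = table.commit(add={"f2.parquet": [{"campaign": "b", "clicks": 5}]})
# Rewrite campaign "a" by swapping files rather than editing f1 in place.
s2 = table.commit(add={"f3.parquet": [{"campaign": "a", "clicks": 12}]},
                  remove=frozenset({"f1.parquet"}))
print(table.scan(s0))  # time travel to the first version
print(table.scan())    # latest version
```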

Zero ETL is another major shift: it replicates data between operational and analytical systems in near real time. Together, these capabilities lay the groundwork for real-time insights that scale.

Scalability for Enterprise Use

Lakehouse architectures shine when it comes to scaling. By decoupling storage from compute, marketing teams can adjust resources independently. Data is stored in affordable cloud object storage solutions like Amazon S3 or ADLS, while compute power scales up or down based on campaign demands. Modern formats like Apache Iceberg can handle billions of files, enabling cloud-native analytical queries that run up to 100 times faster than older systems.

This flexibility extends to multiple compute engines - such as Spark, Trino, and Flink - accessing the same data for business intelligence, machine learning, or real-time streaming. Take ASUS, for example: by centralizing their marketing data in BigQuery, they integrated data from 15 sources and mapped 50 fields. Reports that previously took days now generate in minutes.

Support for Real-Time Analytics

Lakehouses merge batch and streaming workloads into a single pipeline. This shift enables continuous micro-batch processing, supported by exactly-once commits and real-time file management. Companies adopting these unified pipelines report that their feature development cycles are up to 50% faster.
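Exactly-once results in micro-batch pipelines usually come from making commits idempotent: if a batch is retried after a failure, it must not be applied twice. The sketch below shows that idea with a hypothetical `IdempotentSink` that records committed batch IDs alongside the data. Real table formats make the data write and the batch bookkeeping a single atomic commit, which this in-memory version only imitates.

```python
from typing import Dict, List, Set


class IdempotentSink:
    """Hypothetical sink that applies each micro-batch at most once."""

    def __init__(self) -> None:
        self.committed_batches: Set[int] = set()
        self.clicks_by_campaign: Dict[str, int] = {}

    def commit_batch(self, batch_id: int, rows: List[dict]) -> bool:
        if batch_id in self.committed_batches:
            return False  # a retried batch that already landed: skip it
        staged = dict(self.clicks_by_campaign)  # build the new state off to the side
        for row in rows:
            staged[row["campaign"]] = staged.get(row["campaign"], 0) + row["clicks"]
        # Publish the data and record the batch ID together; a table format would
        # make these two steps one atomic commit.
        self.clicks_by_campaign = staged
        self.committed_batches.add(batch_id)
        return True


sink = IdempotentSink()
batch = [{"campaign": "spring_sale", "clicks": 3}, {"campaign": "retarget", "clicks": 2}]
sink.commit_batch(41, batch)
sink.commit_batch(41, batch)  # simulated retry after a crash
print(sink.clicks_by_campaign)  # {'spring_sale': 3, 'retarget': 2}
```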

The demand for real-time analytics is fueling rapid growth in the lakehouse market, which is expanding at 22.9% annually and is expected to surpass $66 billion by 2033. Change Data Capture (CDC) tools play a key role here, feeding live data from CRMs and ad platforms into lakehouse tables. This ensures marketing teams always have access to the most current information.

Integration with Existing Marketing Tools

Lakehouse architectures also excel at integrating with existing marketing tools. Open table formats like Apache Iceberg, Delta Lake, and Apache Hudi allow multiple query engines and BI tools to access centralized data simultaneously. This centralized approach simplifies data management.

Customer Data Platforms are evolving too, shifting from isolated silos to orchestration layers built on lakehouse foundations. Because the data stays in open formats, marketing teams can switch tools without disrupting their centralized data setup, and resources like the Marketing Analytics Tools Directory help them compare solutions for real-time analytics and campaign tracking.

5. Hybrid Cloud and On-Premise Models

Hybrid architectures offer a smart balance between the security of on-premises systems and the scalability of cloud-based analytics. By keeping sensitive customer data on-premises while leveraging cloud resources for large-scale analytics, marketing teams can meet strict regulations like GDPR and CCPA without compromising performance. Chris Thomas, Principal and U.S. Cloud Leader at Deloitte Consulting LLP, highlights this approach:

A hybrid cloud-based data platform enables organizations to achieve scale, security, and governance while undergoing a data-driven transformation.

This setup is becoming increasingly essential - 83% of organizations expect their AI workflows to grow by 2026, requiring infrastructure that can quickly adapt to evolving needs. Beyond compliance, hybrid models streamline marketing data workflows, improving overall efficiency.

Impact on Marketing Data Efficiency

One of the standout benefits of hybrid models is the separation of operational systems from analytical workloads. This ensures that marketing queries don’t slow down essential systems like CRM or ERP platforms used for daily operations. Sudipta Ghosh, Partner at PwC India, explains:

The first advantage is decoupling the system of records from the system of analytics. You don't want to slow down that system [of records] when pulling data from it for reports and analytics.

Companies using hybrid lakehouse architectures have reported savings of 40% to 60% in total data infrastructure costs by optimizing workload allocation. On-premises servers handle latency-sensitive tasks, such as personalization (within 100–300 milliseconds), while the cloud manages large-scale trend analysis and AI model training. This separation strengthens the unified data foundation that modern marketing strategies rely on.

Scalability for Enterprise Use

Hybrid models are particularly effective at handling fluctuating demand. Enterprises maintain stable on-premises capacity for routine reporting and scale up to the cloud during peaks, such as seasonal marketing campaigns or exploratory analytics projects. This incremental migration avoids the risks of a disruptive "big bang" transition and reduces the maintenance burden of a purely on-premises setup. Cloud storage is also economical for archiving historical campaign data: at roughly $23 to $40 per TB per month, teams can move older datasets to the cloud while keeping active datasets on-premises.
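The archiving trade-off is easy to quantify. This sketch assumes a hypothetical rule of thumb (datasets not queried in 90 days move to cloud object storage) and uses the $23 to $40 per TB per month range quoted above to estimate the monthly archive bill; the dataset names and sizes are illustrative.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Dataset:
    name: str
    size_tb: float
    days_since_last_query: int


def plan_tiering(datasets: List[Dataset], archive_after_days: int = 90,
                 price_per_tb_month: Tuple[float, float] = (23.0, 40.0)):
    """Split datasets into on-prem (active) and cloud archive, and estimate the archive bill range."""
    active = [d for d in datasets if d.days_since_last_query <= archive_after_days]
    archive = [d for d in datasets if d.days_since_last_query > archive_after_days]
    archived_tb = sum(d.size_tb for d in archive)
    low, high = price_per_tb_month
    return active, archive, (archived_tb * low, archived_tb * high)


inventory = [
    Dataset("campaigns_current", 4.0, 1),
    Dataset("campaigns_2024", 30.0, 400),
    Dataset("clickstream_2023", 20.0, 800),
]
active, archive, (low, high) = plan_tiering(inventory)
print([d.name for d in archive], low, high)  # ['campaigns_2024', 'clickstream_2023'] 1150.0 2000.0
```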

Support for Real-Time Analytics

Hybrid environments also support real-time analytics. Change Data Capture (CDC) streams database changes from on-premises systems to cloud analytics platforms without degrading the performance of the source systems, enabling instant actions like cart recovery and dynamic pricing while staying compliant with regulations. Companies using real-time data processing report 23% higher revenue growth than those relying solely on batch processing. Federated query tools like Redshift Spectrum and BigQuery Omni also let teams query data where it lives, such as in object storage or another cloud, without first moving it, which reduces latency and egress costs.

Integration with Existing Marketing Tools

The rise of composable stacks makes it easier for marketers to integrate specialized cloud tools with their existing on-premises systems. Open table formats like Apache Iceberg allow different compute engines to access the same data simultaneously, providing flexibility. With forecasts projecting that 75% of enterprises would adopt cloud-based data management solutions by 2025, hybrid models serve as a crucial transitional phase. Marketing teams can even deploy AI models directly within their governed data environments, avoiding the risks of exporting sensitive information to external vendors. Resources like the Marketing Analytics Tools Directory help teams identify tools for real-time analytics and campaign tracking that align with hybrid architectures, keeping data secure while enabling access to advanced features.

6. Data Governance and Privacy-Preserving Analytics

As automation and real-time data processing continue to evolve, data governance has emerged as more than just a compliance tool - it's now a competitive advantage for marketing teams. With trust in tech companies at alarmingly low levels - 77% of Americans don't trust social media executives to safeguard their data, and 70% are wary of AI companies - organizations are embedding privacy measures into every layer of their data systems. Modern governance frameworks are also streamlining operations, saving teams 90–100 hours per week on manual data transformation tasks, freeing them up for more strategic work.

Impact on Marketing Data Efficiency

The rise of integrated governance tools is reshaping how marketing teams handle data. Platforms like Snowflake Horizon and Unity Catalog now build quality checks, anomaly detection, and usage monitoring directly into the platform. This creates a single, reliable dataset that reduces disputes over performance metrics and keeps everyone working from the same, accurate information.

Heather Roth, Director of Digital Strategy at Slalom, underscores the importance of this shift:

Consent, transparency and responsible AI are not just checkboxes, they are competitive differentiators. Organizations must embed privacy and governance into every layer of activation, AI and personalization.

One key innovation is the use of data contracts - code-based agreements that define schemas and enforce quality standards. These contracts ensure consistency across decentralized systems and catch policy violations in real time, preventing costly "data downtime" before it disrupts campaigns. These advancements make it easier to integrate privacy-first practices into marketing workflows without sacrificing efficiency.
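A data contract can be as lightweight as a schema plus validation rules that run before records are published. The sketch below is a hypothetical contract for a campaign-spend event (field names and rules are illustrative); dedicated schema and data-quality tools exist for this, but the core check looks like this.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Optional


@dataclass(frozen=True)
class FieldRule:
    type_: type
    required: bool = True
    check: Optional[Callable[[Any], bool]] = None  # extra business rule, if any


# Hypothetical contract for a campaign-spend event.
CONTRACT: Dict[str, FieldRule] = {
    "event_id": FieldRule(str),
    "campaign_id": FieldRule(str),
    "spend_usd": FieldRule(float, check=lambda v: v >= 0),
    "consent": FieldRule(bool),
    "utm_source": FieldRule(str, required=False),
}


def validate(record: dict, contract: Dict[str, FieldRule] = CONTRACT) -> List[str]:
    """Return a list of contract violations (empty means the record conforms)."""
    errors = []
    for name, rule in contract.items():
        if record.get(name) is None:
            if rule.required:
                errors.append(f"missing required field: {name}")
            continue
        value = record[name]
        if not isinstance(value, rule.type_):
            errors.append(f"{name}: expected {rule.type_.__name__}, got {type(value).__name__}")
        elif rule.check is not None and not rule.check(value):
            errors.append(f"{name}: failed check")
    # Schema drift: fields the contract doesn't know about are also violations.
    errors.extend(f"unexpected field: {n}" for n in sorted(set(record) - set(contract)))
    return errors


print(validate({"event_id": "e1", "campaign_id": "c9", "spend_usd": 12.5, "consent": True}))  # []
print(validate({"event_id": "e2", "spend_usd": -1.0, "consent": "yes", "extra": 1}))
```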

Integration with Existing Marketing Tools

Privacy-preserving analytics is changing how sensitive customer information is managed. Instead of sending data to external AI models, many organizations now deploy AI models within their own data systems. This approach enhances security, prevents proprietary data from being used to train external systems, and allows teams to monitor AI outputs for potential bias.

Server-side tagging has become another critical tool, acting as a checkpoint to filter data and enforce consent rules before sharing information with external platforms. For instance, when a user opts out, centralized systems can instantly update all connected tools to reflect that preference. Resources like the Marketing Analytics Tools Directory help teams identify solutions that support privacy-first setups, ensuring compliance while still delivering robust campaign tracking and audience insights.
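The checkpoint role of server-side tagging can be sketched as a routing function that consults a central consent store before forwarding each event. Destination names, consent purposes, and the list of identifier fields below are hypothetical; the point is that an opt-out recorded once in the consent store takes effect for every destination on the next event.

```python
from typing import Dict, Set

# Hypothetical destinations and the consent purpose each one requires.
DESTINATION_PURPOSE: Dict[str, str] = {
    "analytics_tool": "analytics",
    "ad_platform": "advertising",
}
# Hypothetical direct identifiers that never leave the server-side container.
IDENTIFIER_FIELDS: Set[str] = {"email", "phone", "ip_address"}


def route_event(event: dict, consent_store: Dict[str, Set[str]]) -> Dict[str, dict]:
    """Return the payload to forward to each destination the user has consented to."""
    granted = consent_store.get(event["user_id"], set())
    payloads = {}
    for destination, purpose in DESTINATION_PURPOSE.items():
        if purpose in granted:
            payloads[destination] = {k: v for k, v in event.items() if k not in IDENTIFIER_FIELDS}
    return payloads


consent = {"u1": {"analytics", "advertising"}}
event = {"user_id": "u1", "event": "purchase", "value": 40, "email": "a@example.com"}
print(sorted(route_event(event, consent)))  # ['ad_platform', 'analytics_tool']
consent["u1"].discard("advertising")        # the user opts out once, centrally
print(sorted(route_event(event, consent)))  # ['analytics_tool']
```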

7. Big Data Technologies and Scalability Optimization

As marketing datasets grow to petabyte scale, cloud-native data warehouses like Snowflake, Google BigQuery, and Amazon Redshift rely on Massively Parallel Processing (MPP) to keep up. These systems separate storage from compute, delivering fast query performance and supporting the unified data platforms that now underpin modern marketing operations.

Impact on Marketing Data Efficiency

Big data architectures are transforming efficiency. Columnar storage can reduce input/output by more than 90% for queries that touch only a few columns and improve compression rates by 5–10×. Cloud-native warehouses can also ingest over 10 terabytes of data per hour. Meanwhile, automated tuning and patching mean database administrators spend about 70% less time on routine maintenance.
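Both columnar gains can be illustrated with toy numbers. Assuming a hypothetical table of 20 equally sized columns, a query that touches 2 of them reads 90% less data from a columnar layout than from a row layout. Low-cardinality columns such as country codes also compress well with run-length encoding, one of several encodings columnar formats like Parquet use.

```python
from itertools import groupby
from typing import Dict, List, Sequence, Set, Tuple


def scanned_size(column_sizes: Dict[str, int], needed: Set[str], layout: str) -> int:
    """Data scanned by a query that needs only the `needed` columns."""
    if layout == "row":
        return sum(column_sizes.values())  # rows are stored together, so every column is read
    if layout == "columnar":
        return sum(column_sizes[c] for c in needed)  # only referenced columns are read
    raise ValueError(f"unknown layout: {layout}")


def run_length_encode(values: Sequence[str]) -> List[Tuple[str, int]]:
    """Collapse runs of repeated values into (value, count) pairs."""
    return [(value, len(list(run))) for value, run in groupby(values)]


columns = {f"col_{i}": 10 for i in range(20)}  # 20 columns, 10 GB each (illustrative)
row_scan = scanned_size(columns, {"col_0", "col_1"}, "row")
col_scan = scanned_size(columns, {"col_0", "col_1"}, "columnar")
print(row_scan, col_scan, 1 - col_scan / row_scan)  # 200 20 0.9
print(run_length_encode(["US", "US", "US", "DE", "DE"]))  # [('US', 3), ('DE', 2)]
```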

Scalability for Enterprise Use

Elastic auto-scaling ensures marketing dashboards stay responsive during high-demand periods, such as major campaign launches. These systems adjust compute resources in real time, keeping performance smooth. To save costs, warehouses can be configured to auto-suspend after just 5–10 minutes of inactivity, and businesses can take advantage of capacity reservation discounts of up to 40%. This type of scaling allows companies to maintain real-time insights without breaking the budget.

Support for Real-Time Analytics

The shift from traditional batch processing to Zero-ETL architectures is changing how data is handled. Using Change Data Capture (CDC) and event streaming tools like Kafka or Kinesis, businesses can ingest data continuously. Companies using real-time processing report 23% higher revenue growth, and AI-powered ETL automation could cut pipeline maintenance by 70%, reducing reliance on the manual data engineering work that typically takes up 60–80% of the workload. These advances enable faster decision-making and more integrated analytics.

Integration with Existing Marketing Tools

Modern data warehouses act as orchestration hubs, giving Customer Data Platforms (CDPs) and engagement tools access to a unified source of truth. Tools like the Marketing Analytics Tools Directory help teams identify solutions that seamlessly integrate with big data architectures. This supports everything from real-time campaign tracking to business intelligence dashboards, ensuring marketers can leverage their data effectively across platforms.

Conclusion

By 2026, marketing data warehousing is evolving from simply generating insights to enabling autonomous actions. The trends shaping this shift - AI integration, cloud adoption, real-time analytics, data lakehouse architectures, hybrid models, privacy-focused governance, and big data scalability - point to a strategy centered on speed, security, and intelligent automation. As Heather Roth from Slalom argues, today's data processes must deliver immediate, actionable results.

But here’s the catch: these advancements hinge on solid data foundations. With 80% of data teams spending more time preparing data than analyzing it, and 99% of enterprises struggling to define consistent business metrics across tools, prioritizing data hygiene and semantic consistency is non-negotiable. Logan Patterson, Managing Director for Marketing, Advertising, and Customer Experience at Slalom, underscores this point:

The most sophisticated stack you can comprise will ultimately fail if marketing, business, and tech teams aren't trained, incentivized, and understand how their current way of working is going to evolve.

Privacy, once a compliance checkbox, is now a competitive edge. Marketers who invest in first-party data and consent-driven personalization build trust - a critical asset in a world where 77% of Americans distrust social media executives to safeguard their data. Roth emphasizes this shift:

Consent, transparency, and responsible AI are not just checkboxes; they are competitive differentiators.

AI workflows and dynamic insight layers are enabling teams to shift their focus from manual data handling to strategic creativity. Roman Vinogradov, VP of Product at Improvado, captures this transformation:

AI isn't replacing marketing teams, but teams that know how to work with AI will outperform those that don't.

Success in this new era requires more than just technical upgrades; it demands cultural adaptation. Organizations must treat data modernization as a human-centric change initiative. Start with repeatable processes, adopt flexible architectures to avoid vendor lock-in, and run AI models on unified data platforms. Tools like the Marketing Analytics Tools Directory (https://topanalyticstools.com) can help businesses find solutions that seamlessly integrate with these architectures, enabling everything from real-time campaign tracking to advanced business intelligence dashboards.

The future belongs to those who balance cutting-edge technology with the human ability to adapt and innovate. By embracing both, organizations will lead the way in marketing performance and redefine success in 2026 and beyond.

FAQs

What’s the first step to modernize a marketing data warehouse?

To modernize a marketing data warehouse in 2026, the first step is adopting a cloud-native architecture. This shift brings benefits like elastic scalability, serverless infrastructure, and real-time performance, while eliminating the hassle and expense of upgrading physical hardware.

By combining cloud-native systems with real-time data tools, marketing teams can unify data from various sources seamlessly. This integration provides easy access to actionable insights, laying the groundwork for advanced analytics and AI-powered decision-making.

How do real-time streaming and Zero ETL work together?

Real-time streaming and Zero ETL complement each other by reducing the need for hand-built ETL pipelines. Streaming platforms like Kafka capture and move events the moment they are generated, while Zero ETL integrations automatically replicate data from operational sources into the analytics platform (or let it be queried in place), so teams no longer have to build and maintain separate extraction, transformation, and loading jobs.

Together, they create a continuous flow from data creation to analysis, enabling immediate insights, faster decision-making, and highly personalized marketing, which is crucial for businesses meeting rapidly changing customer expectations in 2026.

How can we use AI in-warehouse without risking customer privacy?

To run AI inside your data warehouse while respecting customer privacy, follow privacy-by-design principles and build privacy considerations into every step of your AI processes. Start with strict consent management so customers know how their data is used, and implement robust data governance to maintain control and oversight over data handling.

Use privacy-preserving techniques such as anonymization, pseudonymization, and encryption to protect sensitive information. Focus on first-party data strategies and keep any data sharing strictly consent-based. These steps help maintain customer trust and support compliance with regulations like GDPR, enabling secure and responsible use of AI within the warehouse.
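Pseudonymization is the easiest of these techniques to sketch. The example uses a keyed hash (HMAC-SHA256 from Python's standard library) so that tokens stay consistent for joins but cannot be reversed, or recomputed for a guessed email, without the secret key. The key shown is a placeholder; in practice it would live in a secrets manager. Note that pseudonymized data generally still counts as personal data under GDPR.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Return a stable, keyed token for an identifier such as an email address."""
    normalized = identifier.strip().lower()  # so "A@x.com " and "a@x.com" match
    return hmac.new(secret_key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


KEY = b"replace-with-a-key-from-your-secrets-manager"  # placeholder only
token = pseudonymize("Jane.Doe@Example.com ", KEY)
print(len(token))  # 64
```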