Poor data quality can derail marketing campaigns and waste budgets. Real-time data quality monitoring tools address this by flagging issues as they occur, so the insights behind your decisions stay reliable. With global data volumes reaching 180 zettabytes in 2025, manual monitoring is no longer practical. These tools validate data at the source, detect anomalies using AI, and integrate with the platforms marketing teams already rely on, such as Salesforce and Snowflake.

Key features to look for:

- Real-Time Monitoring: Catch errors at the point of data collection.

- AI-Powered Anomaly Detection: Spot issues like schema changes or null spikes automatically.

- Platform Integrations: Ensure compatibility with your tools (e.g., BigQuery, dbt).

Top Tools:

- Soda: Collaborative data contracts and a free tier for small teams.

- Monte Carlo: End-to-end AI monitoring with Salesforce integration.

- Anomalo: Machine learning for proactive issue detection.

- Bigeye: Detailed lineage tracking for enterprise needs.

- Lightup: User-friendly alerts for marketing teams.

- Talend: Hybrid pipeline management for complex systems.

- Metaplane: Quick deployment for cloud data warehouses.

Real-time monitoring ensures trustworthy data, saving time and money while improving marketing outcomes. Choose a tool based on your specific goals, integrations, and team needs.


What to Look for in Real-Time Data Quality Monitoring Tools

Choosing the right monitoring tool can drastically shorten the time it takes to identify and resolve data issues. Instead of discovering problems months down the line, the right platform helps you catch them in hours. When evaluating these tools, focus on three critical features that directly influence the success of your cross-channel marketing campaigns.

Real-Time Data Integration and Monitoring

Stop bad data at the source. Effective tools validate and enforce quality rules right at the point of data capture or ingestion, ensuring errors don’t flow downstream into your marketing platforms. Look for platforms that offer in-flight transformations, allowing you to clean, reshape, and correct data in real time as it moves from collection to delivery.
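To make source-level validation concrete, here is a minimal Python sketch of an in-flight check. The event fields (`email`, `event_name`, `order_value`) and the three rules are assumptions chosen for illustration, not any vendor's schema or API. The function cleans each event, collects rule violations, and routes failing events to a quarantine list instead of sending them downstream.

```python
from dataclasses import dataclass, field


@dataclass
class ValidationResult:
    event: dict
    errors: list = field(default_factory=list)

    @property
    def is_valid(self) -> bool:
        return not self.errors


def validate_event(raw: dict) -> ValidationResult:
    """Clean an incoming event and collect rule violations (hypothetical schema)."""
    event = dict(raw)  # work on a copy so the caller's dict is untouched
    errors = []

    # In-flight transformation: normalize the email before checking it.
    email = str(event.get("email") or "").strip().lower()
    event["email"] = email
    if "@" not in email:
        errors.append("email is missing or malformed")

    # Required-field rule.
    if not event.get("event_name"):
        errors.append("event_name is required")

    # Range rule: order values must be non-negative numbers.
    value = event.get("order_value", 0)
    if not isinstance(value, (int, float)) or value < 0:
        errors.append("order_value must be a non-negative number")

    return ValidationResult(event=event, errors=errors)


def route(events):
    """Split a batch into events to deliver and events to quarantine."""
    deliver, quarantine = [], []
    for raw in events:
        result = validate_event(raw)
        (deliver if result.is_valid else quarantine).append(result)
    return deliver, quarantine
```

Quarantining rather than silently dropping bad events keeps a record of what failed and why, which makes it easier to fix the collection code that produced them.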

Keep your data fresh for time-sensitive campaigns. Whether it’s an abandoned cart email or a flash sale notification, timely customer data is essential. Set specific service level agreement (SLA) thresholds for data delivery times to ensure campaigns are based on the most up-to-date customer actions. Advanced platforms also include data diffing features, which help pinpoint discrepancies between source and destination databases, ensuring everything stays synchronized across critical pipelines.
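Freshness SLAs and data diffing can both be sketched in a few lines of Python. The example below is illustrative only: the 15-minute SLA used in the usage note and the `{primary_key: row}` layout are assumptions, and commercial tools typically compare data at warehouse scale rather than in memory.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def is_fresh(last_loaded_at: datetime, sla: timedelta,
             now: Optional[datetime] = None) -> bool:
    """Return True if the most recent load is within the SLA window."""
    now = now or datetime.now(timezone.utc)
    return now - last_loaded_at <= sla


def diff_rows(source: dict, destination: dict) -> dict:
    """Compare two {primary_key: row} mappings and report discrepancies."""
    missing = sorted(k for k in source if k not in destination)
    unexpected = sorted(k for k in destination if k not in source)
    changed = sorted(
        k for k in source if k in destination and source[k] != destination[k]
    )
    return {"missing": missing, "unexpected": unexpected, "changed": changed}
```

For an abandoned-cart flow, a call such as `is_fresh(last_loaded_at, timedelta(minutes=15))` would tell you whether the cart table is current enough to trigger emails from.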

But catching bad data isn’t enough - tools must also identify unexpected issues as they arise.

Anomaly Detection and Alerts

Manually setting thresholds for every metric isn’t practical. Modern tools leverage unsupervised machine learning to analyze historical trends, uncover hidden patterns, and adapt to seasonal variations. These systems excel at spotting “unknown unknowns,” such as sudden spikes in null values, duplicate entries, or unexpected schema changes, before they impact your marketing dashboards or AI-driven attribution models.
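The idea of learning a baseline from history, rather than hard-coding a threshold, can be illustrated with a much simpler statistic than the unsupervised models these platforms use. The Python sketch below scores the latest daily null rate against past values using the median and the median absolute deviation (MAD). It is a stand-in for illustration, not how any listed vendor detects anomalies, and the 3.5 cutoff is a common rule of thumb rather than a tuned value.

```python
from statistics import median


def robust_z_score(history, latest):
    """Score `latest` against `history` using the median and MAD."""
    center = median(history)
    mad = median([abs(x - center) for x in history])
    if mad == 0:
        # No variation in history: any change at all is treated as extreme.
        return 0.0 if latest == center else float("inf")
    # 1.4826 scales the MAD so it is comparable to a standard deviation
    # when the data is roughly normally distributed.
    return abs(latest - center) / (1.4826 * mad)


def is_anomalous(history, latest, threshold=3.5):
    """Flag `latest` when it sits far outside the historical baseline."""
    return robust_z_score(history, latest) > threshold
```

Because the baseline comes from the data itself, the same function works for a column that is normally 1% null and one that is normally 40% null, with no per-metric threshold to maintain. Unlike the ML approaches described above, this sketch does not account for seasonality.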

"Anomalo automatically builds ML models for each dataset based on the history, patterns, and structure of the data... enabling pro-active data quality resolution which can result in improved operations, analytics and AI." - Stewart Bond, Research Vice President, IDC

Quick response times are essential when something goes wrong. The best platforms streamline root cause analysis by highlighting problem areas, such as specific columns or segments with anomalies, and providing examples of both “good” and “bad” data. This saves hours of manual troubleshooting. Additionally, multi-channel alerts - delivered through Slack, Teams, PagerDuty, or webhooks - ensure your team can respond immediately. Keep in mind that machine learning models generally require 3–14 days of historical data to start detecting anomalies effectively, with accuracy improving significantly over 30–60 days.
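As a rough sketch of what a webhook alert involves, the Python below formats an anomaly into a message and posts it as JSON. The `{"text": ...}` body matches the simple format Slack incoming webhooks accept; the function names, the message wording, and the webhook URL you would pass in are assumptions for illustration.

```python
import json
import urllib.request


def build_alert(table, column, metric, observed, expected):
    """Format an anomaly as a Slack-style message body ({"text": ...})."""
    text = (
        f"Data quality alert on {table}.{column}: "
        f"{metric} is {observed:.1%} (expected about {expected:.1%})"
    )
    return {"text": text}


def send_alert(webhook_url, payload, timeout=5):
    """POST the payload as JSON and return the HTTP status code."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

Naming the table, column, and metric in the message gives whoever receives it a starting point for root cause analysis without opening the monitoring tool first.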

Finally, seamless integration with your existing tools can enhance the overall effectiveness of your monitoring efforts.

Cross-Channel Marketing Platform Integrations

A monitoring tool’s value lies in how well it fits into your existing marketing stack. Look for platforms that integrate with cloud warehouses like Snowflake and BigQuery to monitor data at rest, as well as orchestration and transformation tools like Airflow and dbt to catch issues during data transformation. For example, in 2024, Magic Eden’s analytics team saved 72 hours each month by enforcing shared data definitions at the source, reducing the need for manual cleaning.

Centralized management simplifies workflows. Tools that offer a single hub for managing credentials and access levels across third-party data sources reduce friction. Integration with data catalogs like Alation makes it easier for marketers and business users - not just data engineers - to access data quality insights. Direct connections to orchestrators also allow you to detect pipeline interruptions or schema changes before they disrupt downstream marketing models.

Top Real-Time Data Quality Monitoring Tools

The tools listed below are designed to address real-time data quality challenges, each offering unique features to meet varying needs. From small teams to large enterprises, these platforms provide essential capabilities like anomaly detection, lineage tracking, and automated setup. Trusted by leading companies across industries, they can help ensure your data remains reliable and actionable.

Soda

Soda takes a collaborative approach to data quality with its "data contracts" feature, which aligns data producers and consumers by setting clear expectations. This reduces errors and miscommunication downstream. Its three-tier architecture - Core, Agent, and Cloud - delivers end-to-end visibility, while its YAML-based SodaCL syntax is easy for both technical and non-technical users to adopt.

The platform’s AI algorithms automatically identify anomalies, and its free tier supports up to three datasets, making it a great option for smaller teams testing real-time monitoring. Paid plans for larger teams start at $8 per dataset per month when billed annually.

"Soda's blend of simplicity, observability, and collaboration makes it one of the most effective data quality monitoring tools for agile teams." - OvalEdge Team

Brands like HelloFresh use Soda to maintain data quality across operations and customer analytics. It integrates seamlessly with major data warehouses like Snowflake, BigQuery, and Databricks, where marketing and operational data often reside.

Monte Carlo

Monte Carlo is a pioneer in the data observability space, trusted by over 150 enterprises to monitor data health. For companies where downtime directly affects revenue, this platform offers automated quality coverage across the entire data lifecycle, from ingestion to consumption.

Its AI-driven monitoring system tracks data freshness, volume, and schema changes without requiring manual input. The platform’s monitoring agent analyzes patterns and recommends quality checks, saving time for enterprises focused on uptime and root cause analysis.

"Monte Carlo pioneered the data + AI observability category... fundamentally changing how organizations ensure data reliability." - Monte Carlo Team

Monte Carlo integrates with tools like Salesforce and Salesforce Data Cloud, enabling source-level monitoring of Customer 360 profiles. Pricing is customized based on data volume and organizational requirements.

Anomalo

Anomalo uses machine learning to identify data quality issues, detecting hidden patterns and seasonal trends without manual rules. Financial giants like Discover and Nationwide Insurance rely on Anomalo to monitor structured and unstructured data. By building models on historical data, it helps teams catch and resolve issues before they affect operations or analytics. Pricing is tailored to the volume and type of data being monitored.

Bigeye

Bigeye focuses on enterprise-grade data observability with robust lineage tracking. It automatically maps data dependencies, helping teams understand how issues in one area can impact dashboards or machine learning models. The platform reduces setup time by analyzing data usage patterns and suggesting quality checks. With custom pricing offered as an annual subscription, Bigeye is ideal for organizations that need detailed lineage insights and automated discovery.

Lightup

Lightup is tailored for marketing analytics teams, offering intuitive real-time monitoring that even non-technical users can manage. Its real-time alerts help teams quickly address unexpected anomalies in campaign data. Lightup connects with data warehouses like Snowflake, BigQuery, and Databricks to ensure comprehensive monitoring of data health.

Talend Data Quality

Talend excels in managing data pipelines across hybrid environments, making it a go-to for enterprises with both cloud and on-premise systems. Retailers and other organizations with complex infrastructures use Talend to clean, transform, and monitor data in real time. Its integration-focused approach simplifies workflows by connecting modern cloud tools with legacy systems, addressing quality issues as they arise.

Metaplane

Known as the "Datadog for data", Metaplane provides quick deployment for modern cloud data warehouses. SaaS and fintech companies like Ramp and Drizly value its low-overhead setup and ability to detect anomalies in dbt models, Snowflake tables, and Looker dashboards. Acquired by Datadog in 2025, Metaplane now offers unified application and data observability. It features a free tier for limited tables, with team plans starting at a few hundred dollars per month, and it scales alongside your data needs.

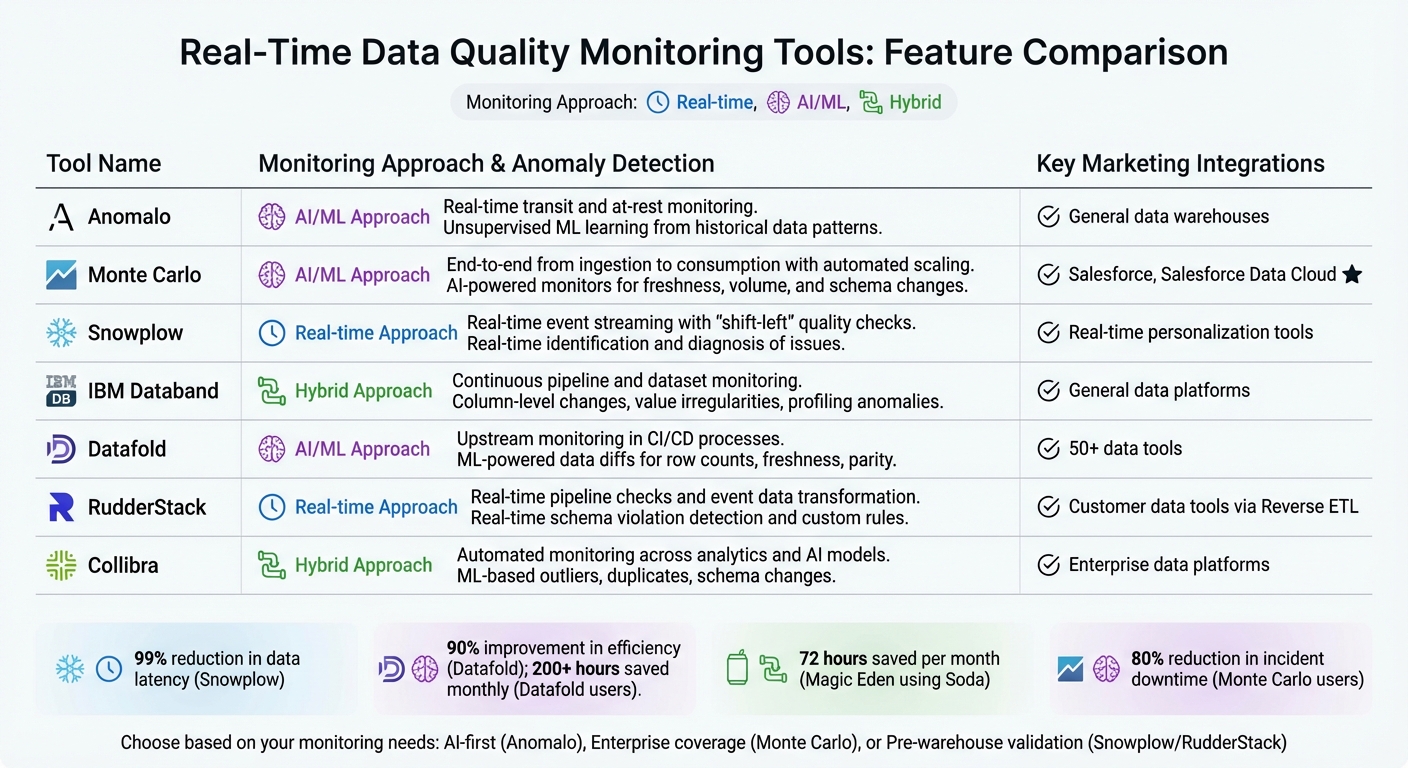

Comparison Table: Real-Time Data Quality Monitoring Tools

Real-Time Data Quality Monitoring Tools Comparison: Features and Integrations

Choosing the right platform depends on where you are in your data monitoring journey and how much automation you require. Below is a comparison of tools based on their monitoring methods, anomaly detection capabilities, and integration options.

| Tool | Real-Time Monitoring Approach | Anomaly Detection Method | Key Marketing Integrations |
|---|---|---|---|
| Anomalo | Real-time transit and at-rest monitoring | Unsupervised ML that automatically learns from historical data patterns | - |
| Monte Carlo | End-to-end monitoring from ingestion to consumption with automated scaling | AI-powered monitors for freshness, volume, and schema changes | Salesforce, Salesforce Data Cloud |
| Snowplow | Real-time event streaming pipelines with "shift-left" quality checks | Real-time identification and diagnosis of data quality issues | Real-time personalization tools |
| IBM Databand | Continuous monitoring of data pipelines and datasets | Alerts on column-level changes, value irregularities, and profiling anomalies | - |
| Datafold | Upstream monitoring integrated into CI/CD processes | ML-powered detection using data diffs for row counts, freshness, and data parity | - |
| RudderStack | Real-time pipeline checks and transformation of event data | Real-time schema violation detection and custom rule enforcement | Customer data tools via Reverse ETL |
| Collibra | Automated monitoring across analytics and AI models | ML-based identification of outliers, duplicates, and schema changes | - |

This table offers a glimpse into how these tools balance real-time monitoring with marketing integration capabilities.

For those prioritizing machine learning that requires minimal manual setup, Anomalo is a strong contender. As Stewart Bond, Research Vice President at IDC, explains:

"Anomalo is differentiated in its AI-first approach to data quality. Anomalo automatically builds ML models for each dataset based on the history, patterns and structure of the data."

On the other hand, Monte Carlo shines with its comprehensive coverage and seamless Salesforce integrations, making it ideal for marketing teams leveraging Customer 360 data.

If your focus is on addressing issues before they reach your data warehouse, tools like Snowplow and RudderStack are worth considering. Snowplow users have reported a 99% reduction in data latency thanks to its real-time delivery capabilities. These tools tackle data quality problems as they occur, rather than notifying you after the fact.

For teams managing database migrations or intricate transformations, Datafold is a practical choice. It enables faster testing and code reviews by automating upstream data quality checks, with users reporting a 90% improvement in efficiency. Meanwhile, Collibra offers an AI-powered rule builder that translates natural language into technical rules, making it accessible for teams without advanced technical skills.

These insights can help you select the right tool to ensure reliable, real-time data quality monitoring within your marketing analytics ecosystem.

How to Choose the Right Tool for Your Marketing Analytics Stack

Picking the right data quality monitoring tool isn't about going for the platform with the most bells and whistles. It's about finding one that aligns with your marketing workflows and budget. The wrong choice can lead to time-consuming maintenance and costly errors, while the right one can save you hours and improve campaign efficiency. Here’s how to narrow down your options.

Define Your Campaign Goals and Data Needs

Start by identifying the most critical gaps in your data quality. For example, if you’re managing campaigns across platforms like Meta, Google Ads, and LinkedIn, maintaining consistent naming conventions is essential. Without automated checks for UTM tags or campaign names, data validation can become a bottleneck, delaying campaign launches.
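An automated UTM check can be quite small. The Python sketch below parses a landing-page URL and reports any required UTM parameter that is missing or breaks a naming rule. The required parameter list and the lowercase-with-underscores convention are assumptions for illustration; substitute whatever convention your team has agreed on.

```python
import re
from urllib.parse import parse_qs, urlparse

REQUIRED_UTM = ("utm_source", "utm_medium", "utm_campaign")
# Hypothetical convention: lowercase letters, digits, and underscores only.
NAME_PATTERN = re.compile(r"^[a-z0-9_]+$")


def check_utm(url):
    """Return a list of naming problems found in a landing-page URL."""
    params = parse_qs(urlparse(url).query)
    problems = []
    for key in REQUIRED_UTM:
        values = params.get(key)
        if not values:
            problems.append(f"{key} is missing")
        elif not NAME_PATTERN.match(values[0]):
            problems.append(f"{key}={values[0]!r} breaks the naming convention")
    return problems
```

Running a check like this over every ad URL before launch catches inconsistencies such as `Meta` versus `meta` that would otherwise split one campaign across several rows in your reports.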

Decide between two main types of monitoring systems: rule-based checks and AI-driven anomaly detection. Rule-based systems let you define specific queries or validations (like ensuring budget fields aren’t negative). On the other hand, AI-powered tools are better at spotting unexpected issues, such as sudden drops in conversion rates that don’t match historical patterns or seasonal expectations.

"It's everyone's job usually means no one actually does it. Without clear ownership, issues get patched reactively, and it becomes harder to really trust your data."

To avoid this, assign a dedicated person - like a Marketing Ops lead or Analyst - to oversee data quality. Then, pick a tool that matches their technical expertise. Whether they prefer a no-code interface or advanced YAML configurations, the tool should feel manageable from day one.

Check Compatibility with Your Current Tools

Your monitoring tool is only as effective as its ability to integrate with your existing systems. Before committing, confirm that it supports native connectors for both your data warehouse and key marketing platforms. For instance, Datafold provides integrations with more than 50 data tools, enabling seamless monitoring.

Think about whether you need to monitor data "in-flight" as it’s collected (like RudderStack’s event streaming) or "at-rest" once it’s in your warehouse (as offered by Anomalo and Datafold). If your team uses an orchestrator like Apache Airflow or a transformation tool like dbt, confirm compatibility so errors are caught early, before they affect dashboards or reports.

"Data quality is always a challenge. You have to make sure it's clean and usable by the business. With RudderStack, our data is always ready to be used. The integration is seamless..."

Also, ensure the tool can send alerts directly to platforms your team already uses, like Slack, Teams, or PagerDuty. This way, data issues can be addressed promptly without disrupting your workflow. Lastly, evaluate whether the tool can grow alongside your evolving data needs.

Consider Scalability and Long-Term ROI

Poor data quality costs organizations over $400 million annually. But a monitoring solution shouldn’t add to that burden. Look for tools that simplify setup with bulk configuration options and automated table discovery.

In 2025, John Lee, Director of Product Analytics, shared that his team saved over 200 hours of testing per month and boosted productivity by more than 20% after adopting Datafold. The tool automated code reviews for roughly 100 pull requests each month, ensuring data quality before changes went live.

A tiered monitoring approach can help balance costs. For instance, use low-cost, metadata-based monitoring for your entire warehouse, reserving advanced AI-powered checks for critical datasets - such as those related to revenue attribution or customer lifetime value.
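One way to picture a tiered setup is as a small planning function. In the Python sketch below, every table receives inexpensive metadata checks, and only tables carrying a critical tag receive the costlier checks. The tag names and check names are hypothetical placeholders, not settings from any specific tool.

```python
# Hypothetical tags marking datasets that justify deeper monitoring.
CRITICAL_TAGS = {"revenue_attribution", "customer_ltv"}


def plan_monitors(tables):
    """Map each table name to the list of checks it should receive."""
    plan = {}
    for name, tags in tables.items():
        # Metadata-only checks are cheap enough to run on every table.
        checks = ["freshness", "row_count", "schema_change"]
        if CRITICAL_TAGS & set(tags):
            # Deeper, costlier checks are reserved for critical datasets.
            checks += ["ml_anomaly_detection", "column_distribution"]
        plan[name] = checks
    return plan
```

Keeping the tiering rule in one place makes it easy to review which datasets are paying for advanced checks as the warehouse grows.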

Consider the ease of maintenance, too. Tools with no-code interfaces let non-technical marketers set up validation rules independently, while API or YAML options give engineers the flexibility to manage monitors at scale.

"Discover has been using Anomalo in production for nearly 2 years with flourishing adoption... We are confident that Anomalo will enhance our ability to monitor data quality at scale and with less manual effort."

Finally, prioritize tools that include automated root-cause analysis and data lineage. These features can quickly identify which campaigns or dashboards are affected by data issues, saving hours of manual troubleshooting.

"Anomalo has transformed our data incident response pipeline so we're no longer searching for a needle in a haystack."

Marketing Analytics Tools Directory: Find More Tools for Your Needs

Expanding on the importance of real-time monitoring in maintaining data quality, there's a wealth of tools that can enhance your overall marketing analytics strategy. If you're looking to broaden your toolkit, the Marketing Analytics Tools Directory is a great place to start. It’s designed to help you explore and compare solutions across various categories, including behavioral analytics, pipeline automation, customer data platforms (CDPs), and observability tools.

The directory simplifies your search by organizing tools based on their role in the data lifecycle. Need source-level data validation? It’s got you covered with several options. Looking for downstream monitoring? You’ll find platforms that use machine learning to identify anomalies after data transformation.

You can also refine your search based on technical needs. Whether you prefer no-code interfaces for marketing teams, SQL-based setups for engineers, or on-premises deployment for industries with strict regulations, the directory offers tailored options. It even highlights tools with native connectors for orchestrators like Apache Airflow, transformation tools like dbt, and cloud warehouses such as Snowflake and BigQuery.

The directory goes beyond data quality tools. It features behavioral analytics platforms like Mixpanel and PostHog, data integration solutions capable of automating pipelines from over 500 marketing data sources, and CDPs with more than 1,300 pre-built integrations for seamless omnichannel marketing. With such a wide range, you can build a robust analytics stack that covers everything from real-time user tracking to analyzing cross-channel campaign performance.

Whether you’re managing analytics for a small business or a large enterprise, the directory offers pricing transparency to suit your budget. From free-tier options to custom-priced enterprise solutions, you’ll find the information you need to evaluate ROI and integration compatibility. It’s a one-stop resource to help you refine and complete your marketing analytics stack.

Conclusion

Selecting the right real-time data quality monitoring tool goes beyond simply avoiding technical mishaps - it’s about ensuring your data is trustworthy and empowering your team to make confident, data-driven marketing decisions. With poor data quality costing organizations over $400 million annually and global data volumes expected to surpass 180 zettabytes by 2025, automated monitoring is no longer optional - it’s essential.

The tools highlighted earlier cater to a variety of needs, offering features like AI-driven anomaly detection and upstream monitoring to catch issues before they cascade into larger problems. The key to success lies in choosing a solution that aligns with your unique requirements - whether that’s a no-code interface for marketing teams, a SQL-based platform for data engineers, or compatibility with your existing tech stack and team size.

The benefits of these tools go far beyond error detection. Companies using automated data quality solutions have reported saving over 200 hours of testing per month and cutting data incident downtime by 80% year-over-year. This means your team can focus on optimizing campaigns and boosting revenue instead of firefighting data issues.

To get started, define your campaign objectives and data needs, then identify a tool that integrates smoothly with your analytics setup. Whether you’re running marketing analytics for a small business or a large enterprise, investing in real-time data monitoring can lead to more reliable insights, quicker decision-making, and stronger marketing results.

FAQs

How do real-time data quality monitoring tools work with existing marketing platforms?

Real-time data quality monitoring tools work by connecting directly to marketing platforms using API connectors and data pipelines. These tools seamlessly integrate with systems like CRMs, advertising platforms, or customer data platforms (CDPs) to check and clean data as it flows into analytics or reporting tools. They handle tasks like schema validation, identifying duplicates, and ensuring data is timely, so only reliable data is used for making decisions.

Some tools take it a step further with in-pipeline data transformation. This allows them to validate, enhance, or even block poor-quality data before it reaches downstream platforms like data warehouses or campaign management tools. By catching issues early in the process, marketers can keep data consistent and trustworthy across all channels, leading to better campaign results and actionable insights.

If you're looking for tools that support these types of integrations, check out the Marketing Analytics Tools Directory. It features options designed specifically for real-time analytics and maintaining data quality.

How does AI-powered anomaly detection improve real-time data quality monitoring?

AI-driven anomaly detection takes real-time data quality monitoring to the next level by automatically spotting issues like outliers, duplicate entries, and unexpected schema changes. This enables businesses to identify and fix problems quickly, reducing downtime and maintaining reliable data accuracy.

By delivering faster, more accurate alerts and offering insights into the root causes of issues, these tools simplify troubleshooting. As a result, marketing teams can make better decisions and ensure their campaigns consistently rely on high-quality data.

How does real-time data monitoring enhance marketing campaign performance?

Real-time data monitoring gives marketers the ability to track key metrics as they unfold, offering instant insights into how campaigns are performing. This means teams can quickly spot issues, such as underperforming segments or unexpected trends, and take immediate action. Instead of waiting for end-of-day reports, they can address problems on the fly or seize new opportunities as they arise.

Automated alerts take this a step further by flagging critical changes - like a sudden drop in click-through rates, a spike in cost-per-acquisition, or data quality concerns - and notifying the right team members. This allows for swift adjustments, whether it’s reallocating budgets, correcting errors, or fine-tuning targeting. The result? Reduced wasted spend, campaigns that stay on track, and better chances of hitting performance goals. These tools empower marketers to make quicker, smarter decisions, ensuring every dollar works harder.